At the AMS Annual Meeting panel on Peer Review last January, journal editors Tony Broccoli, Carolyn Reynolds, Walt Robinson, and Jeff Rosenfeld spoke about how authors and reviewers together make good reviews happen:

Robinson: If you want good reviews, and by good I mean insightful and constructive and that are going to help you make your paper better, the way to do that is to write a really good paper. Make sure your ducks are in a row before you send it in. You should have read over that and edited it multiple times. I’m going to, at some point in my life, write a self-help book in which the single word title is, “Edit!” because it applies to many parts of life. Have your colleagues—not even the co-authors—look at it. Buy the person in the office next door a beer to look over the paper and get their comment. There may be problems with the science–and none of our science is ever perfect–but if it’s a really well constructed, well formulated, well written paper, that will elicit really good reviews.

The flip side of that is, if the paper is indecipherable, you’ll get a review back saying, “I’m trying to figure this out” with a lot of questions, and often it’s major revisions. (We don’t reject that many things out of the box.)

The problem is, the author goes back and finally makes the paper at a standard he or she should have sent in the first time. It goes back to the reviewer, and then the reviewer understands the paper and comes back and starts criticizing the science. Then the author gets angry… “You didn’t bring that up the first time!” Well, that’s because the reviewer couldn’t understand the science the first time. So, if you want good, constructive reviews, write good papers!

Reynolds: You want to make things as easy as possible for the reviewers. Make the English clear, make the figures clear. Allow them to focus on the really important aspects.

Broccoli: I would add, affirming what Walt said, that the best reviews constructively give the authors ideas for making their papers better. Some reviewers are comfortable taking the role as the gatekeeper and trying to say whether this is good enough to pass muster. But then maybe they aren’t as strong as need be at explaining what needs to be done to make the paper good enough. The best reviews are ones that apply high standards but also try to be constructive. They’re the reviewers I want to go back to.

Rosenfeld: I like Walt’s word, “Edit.” Thinking like an editor when you are a reviewer has a lot to do with empathy. In journals, generally, the group of authors is identical or nearly the same as the group of readers, so empathy is relatively easy. It’s less true in BAMS, but it still applies. You have to think like an editor would, “What is the author trying to do here? What is the author trying to say? Why are they not succeeding? What is it that they need to show me?” If you can put yourself in the shoes of the author—or in the case of BAMS, in the shoes of the reader—then you’re going to be able to write an effective review that we can use to initiate a constructive conversation with the author.

Broccoli: That reminds me: Occasionally we see a reviewer trying to coax the author into writing the paper the reviewer would have written, and that’s not the most effective form of review. It’s good to have diverse approaches to science. I would rather the reviewer try to make the author’s approach to the problem communicated better and more sound than trying to say, “This is the way you should have done it.”

Peer Review Week 2017: 1. Looking for Reviewers

It’s natural that AMS–an organization deeply involved in peer review–participates in Peer Review Week 2017. This annual reflection on peer review was kicked off today by the International Congress on Peer Review and Scientific Publication in Chicago. If you want to follow the presentations there, check out the videos and live streams.

Since peer review is near and dear to AMS, we’ll be posting this week about peer review, in particular the official international theme, “Transparency in Review.”

To help bring some transparency to peer review, the AMS Publications Department presented a panel discussion on the process in January at the 2017 AMS Annual Meeting in Seattle. Tony Broccoli, longtime chief editor of the Journal of Climate, was the moderator; other editors on the panel were Carolyn Reynolds and Yvette Richardson of Monthly Weather Review, Walt Robinson of the Journal of the Atmospheric Sciences, and Jeff Rosenfeld of the Bulletin of the American Meteorological Society.

You can hear the whole thing online, but we’ll cover parts of the discussion here over the course of the week.

For starters, a lot of authors and readers wonder where editors get peer reviewers for AMS journal papers. The panel offered these answers (slightly edited here because, you know, that’s what editors do):

Richardson: We try to evaluate what different types of expertise are needed to evaluate a paper. That’s probably the first thing. For example, if there’s any kind of data assimilation, then I need a data assimilation expert. If the data assimilation is geared toward severe storms, then I probably need a severe storms expert too. First I try to figure that out.

Sometimes the work is really related to something someone else did, and that person might be a good person to ask. Sometimes looking through what papers they are citing can be a good place to look for reviewers.

And then I try to keep reaching out to different people and keep going after others when they turn me down…. Actually, people are generally very good about agreeing to do reviews and we really have to thank them. It would all be impossible without that.

Reynolds: If you suggest reviewers when you submit to us, I’ll certainly consider them. I usually won’t pick just from the reviewers suggested by the authors. I try to go outside that group as well.

Broccoli: I would add, sometimes if there’s a paper on a topic where there are different points of view, or the topic is yet to be resolved, it can be useful to identify as at least one of the reviewers someone who you know may have a different perspective on that topic. That doesn’t mean you’re going to believe the opinion of that reviewer above the opinion of others but it can be a good way of getting a perspective on a topic.

Rosenfeld: Multidisciplinary papers can present problems for finding the right reviewers. For these papers, I do a lot of literature searching and hunt for that key person who happens to somehow intersect, or be in between disciplines or perspectives; or someone who is a generalist in some way, whose opinion I trust. It’s a tricky process and it’s a double whammy for people who do that kind of research because it’s hard to get a good evaluation.

• • •

If you’re interested in becoming a reviewer, the first step is to let AMS know. For more information read the web page here, or submit this form to AMS editors.

Disaster Do-Overs

Ready to do it all over again? Fresh on the heels of a $100+ billion hurricane, we very well may be headed for another soon.

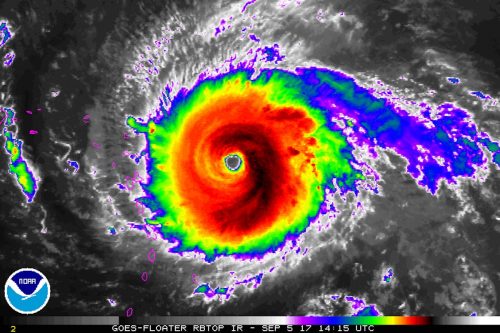

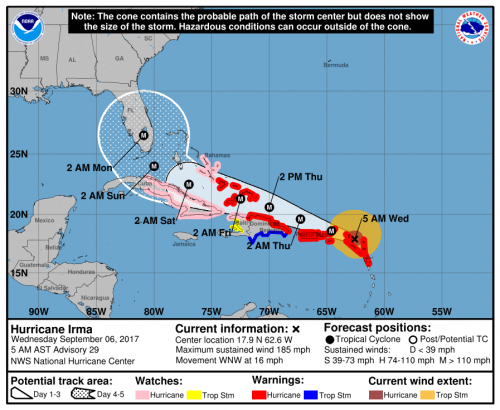

As Houston and the Gulf Coast begin a long recovery from Hurricane Harvey, Hurricane Irma is now rampaging through the Atlantic. With 185 m.p.h. sustained winds on Tuesday, Irma became the strongest hurricane in Atlantic history outside of the Caribbean and Gulf. The hurricane made its first landfall early Wednesday in Barbuda and still threatens the Virgin Islands, Puerto Rico, Cuba, and the United States.

If Irma continues along the general path of 1960’s Hurricane Donna, it could easily tally $50 billion in damage. This estimate, from a study by Karen Clark and Co. (discussed recently on Category 6 Blog), is already four years old (i.e., too low). Increased building costs—which the report notes rise “much faster” than inflation–and continued development could drive recovery costs even higher.

In short, as badly as Houston is suffering, there are do-overs on the horizon: a magnitude of repeated damage costs unthinkable not long ago, before Katrina ($160 billion) and Sandy ($70 billion).

Repeated megadisasters yield lessons, some of them specific to locale and circumstances. In Miami after Hurricane Andrew, the focus was on building codes as well as the variability of the winds within the storms. After Hurricane Rita, the focus was on improving policies on evacuation. After Hurricane Katrina, while the emergency management community reevaluated its response, the weather community took stock of the whole warnings process. It was frustrating to see that, even with good forecasts, more than a thousand people lost their lives. How could observations and models improve? How could the message be clarified?

Ten years after Katrina, the 2016 AMS Annual Meeting in New Orleans convened a symposium on the lessons of that storm and of the more recent Hurricane Sandy (2012). A number of experts weighed in on progress since 2005. It was clear that challenges remained. Shuyi Chen of the University of Miami, for example, highlighted the need for forecasts of the impacts of weather, not just of the weather itself. She urged the community to base those impacts forecasts on model-produced quantitative uncertainty estimates. She also noted the need for observations to initialize and check models that predict storm surge, which in turn feeds applications for coastal and emergency managers and planners. She noted that such efforts must expand beyond typical meteorological time horizons, incorporating sea level rise and other changes due to climate change.

These life-saving measures are part accomplished and part underway—the sign of a vigorous science enterprise. Weather forecasters continue to hone their craft with so many do-overs. Some mistakes recur. As NOAA social scientist Vankita Brown told the AMS audience about warnings messages at the 2016 Katrina symposium, “Consistency was a problem; not everyone was on the same page.” Katrina presented a classic problem where the intensity of the storm, as measured in the oft-communicated Saffir-Simpson rating, was not the key to catastrophe in New Orleans. Mentioning categories can actually create confusion. And again, in Hurricane Harvey this was part of the problem with conveying the threat of the rainfall, not just the wind or storm surge. Communications expert Gina Eosco noted that talk about Harvey being “downgraded” after landfall drowned out the critical message about floods.

Hurricane Harvey poses lessons that are more fundamental than the warnings process itself and are eerily reminiscent of the Hurricane Katrina experience: There’s the state of coastal wetlands, of infrastructure, and of community resilience before emergency help can arrive. Houston, like New Orleans before it, will be considering development practices, concentrations of vulnerable populations, and more. There are no quick fixes.

In short, as AMS Associate Executive Director William Hooke observes, both storms challenge us to meet the same basic requirement:

The lessons of Houston are no different from the lessons of New Orleans. As a nation, we have to give priority to putting Houston and Houstonians, and others, extending from Corpus Christi to Beaumont and Port Arthur, back on their feet. We can’t afford to rebuild just as before. We have to rebuild better.

All of these challenges, simple or complex, stem from an underlying issue that the Weather Channel’s Bryan Norcross emphatically delineated when evaluating the Katrina experience back in 2007 at an AMS Annual Meeting in San Antonio:

This is the bottom line, and I think all of us in this business should think about this: The distance between the National Hurricane Center’s understanding of what’s going to happen in a given community and the general public’s is bigger than ever. What happens every time we have a hurricane—every time–is most people are surprised by what happens. Anybody who’s been through this knows that. People in New Orleans were surprised [by Katrina], people in Miami were surprised by Wilma, people [in Texas] were surprised by Rita, and every one of these storms; but the National Hurricane Center is very rarely surprised. They envision what will happen and indeed something very close to that happens. But when that message gets from their minds to the people’s brains at home, there is a disconnect and that disconnect is increasing. It’s not getting less.

Solve that, and facing the next hurricane, and the next, will get a little easier. The challenge is the same every time, and it is, to a great extent, ours. As Norcross pointed out, “If the public is confused, it’s not their fault.”

Hurricanes Harvey and Katrina caused catastrophic floods for different reasons. Ten years from now we may gather as a weather community and enumerate unique lessons of Harvey’s incredible deluge of rain. But the bottom line will be a common challenge: In Hurricane Harvey, like Katrina, a city’s–indeed, a nation’s–entire way of dealing with the inevitable was exposed. Both New Orleans and Houston were disasters waiting to happen, and neither predicament was a secret.

Meteorologists are constantly getting do-overs, like Irma. Sooner or later, Houston will get one, too.

Improving Tropical Cyclone Forecasts

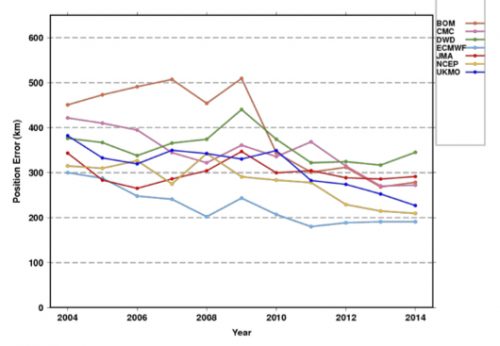

Tropical cyclones are usually associated with bad news, but a long-term study about these storms now posted online for publication in BAMS has some good news–about the forecasts, at least. The authors, a Japanese group led by Munehiko Yamaguchi, studied operational global numerical model forecasts of tropical cyclones since 1991.

Their finding: the model forecasts of storm positions have improved in the last 25 years. In the Western North Pacific, for example, lead time is two-and-a-half days better. Across the globe, errors in 1 – 5 day forecasts dipped by 6 to 14.5 km, depending on the basin.

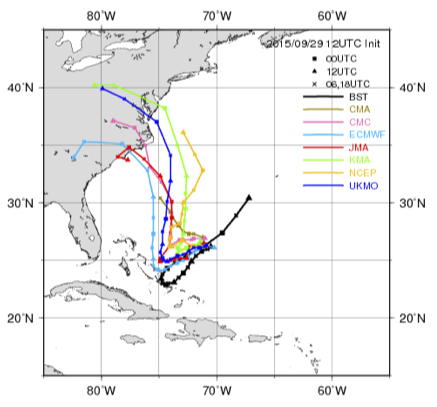

Here are the improvements for the globe as a whole. Each line is a different modeling center:

While position forecasts with a single model are getting better (not so much with intensity forecasts), it seems natural that the use of a consensus of the best models could improve results even more. But Yamaguchi et al. say that’s not true in every ocean basin. The result is not enhanced in the Southern Indian Ocean, for example. The authors explain:

This would be due to the fact that the difference of the position errors of the best three NWP centers is large rather than comparable with each other and thus limits the impact of a consensus approach.
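To see why a consensus helps only when the contributing models have comparable errors, here is a minimal Python sketch. It assumes the consensus is a simple unweighted mean of the forecast positions, which may not be how Yamaguchi et al. build theirs, and all function names and positions are hypothetical values chosen for illustration, not data from the study.

```python
import math

EARTH_RADIUS_KM = 6371.0


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def consensus_position(forecasts):
    """Unweighted mean of (lat, lon) forecasts.

    Assumption: positions are close together and away from the date line,
    so a plain average of degrees is good enough for a sketch.
    """
    lats = [lat for lat, _ in forecasts]
    lons = [lon for _, lon in forecasts]
    return sum(lats) / len(lats), sum(lons) / len(lons)


def position_errors(forecasts, observed):
    """Return (errors of each model, error of the consensus), all in km."""
    individual = [great_circle_km(lat, lon, *observed) for lat, lon in forecasts]
    consensus = consensus_position(forecasts)
    return individual, great_circle_km(*consensus, *observed)


if __name__ == "__main__":
    observed = (20.0, 130.0)  # hypothetical verifying storm position

    # Three models with comparable errors scattered around the truth
    comparable = [(21.0, 130.0), (19.6, 131.0), (19.7, 129.0)]
    # One model clearly better than the other two
    lopsided = [(20.1, 130.0), (23.0, 130.0), (23.5, 131.0)]

    for label, forecasts in (("comparable", comparable), ("lopsided", lopsided)):
        individual, consensus = position_errors(forecasts, observed)
        print(label, [round(e) for e in individual], round(consensus))
```

In the first hypothetical case the scattered errors partly cancel and the consensus beats every individual model; in the second, averaging drags the best model toward the weaker ones, which is the situation the authors describe for the Southern Indian Ocean.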

The authors point towards ways to improve tropical cyclone track forecasts, because not all storms behave the same:

while the mean error is decreasing, there still exist many cases in which the errors are extremely large. In other words, there is still a potential to further reduce the annual average TC position errors by reducing the number of such large-error cases.

For example, take 5-day track forecasts for Hurricane Joaquin in 2015. Hard to find a useful consensus here (the black line is the eventual track):

Yamaguchi et al. note that these are types of situations that warrant more study, and might yield the next leaps in improvement. They note that the range of forecast possibilities now mapped as a “cone of uncertainty” could be improved by adapting to specific situations:

For straight tracks, 90% of the cyclonic disturbances would lie within the cone, but only 39% of the recurving or looping tracks would be within the cone. Thus a situation-dependent track forecast confidence display would be clearly more appropriate.
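The idea of a situation-dependent cone can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the cone at a given lead time is treated as a single fixed radius, the track-type labels and error values are hypothetical, and the function names are invented here rather than taken from the paper.

```python
import math
from collections import defaultdict


def coverage_by_track_type(cases, cone_radius_km):
    """Fraction of cases inside a fixed-radius cone, per track type.

    cases: iterable of (track_type, position_error_km) pairs.
    """
    inside = defaultdict(int)
    total = defaultdict(int)
    for track_type, error_km in cases:
        total[track_type] += 1
        if error_km <= cone_radius_km:
            inside[track_type] += 1
    return {t: inside[t] / total[t] for t in total}


def radius_for_coverage(errors_km, target):
    """Smallest observed error that encloses at least `target` of the cases."""
    ordered = sorted(errors_km)
    k = math.ceil(target * len(ordered))
    return ordered[max(k, 1) - 1]


if __name__ == "__main__":
    # Hypothetical position errors (km) at one lead time
    cases = [
        ("straight", 50), ("straight", 80), ("straight", 120), ("straight", 300),
        ("recurving", 90), ("recurving", 150), ("recurving", 250), ("recurving", 400),
    ]

    # One radius for every storm gives very different coverage by track type
    print(coverage_by_track_type(cases, cone_radius_km=130))

    # A situation-dependent radius: size the cone separately for each type
    for track_type in ("straight", "recurving"):
        errors = [e for t, e in cases if t == track_type]
        print(track_type, radius_for_coverage(errors, target=0.75))
```

A single radius tuned to the whole sample over-covers the easy cases and under-covers the hard ones; sizing the radius per situation is one simple way to make the displayed confidence match the actual error statistics.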

Check out the article for more of Yamaguchi et al.’s global perspective on how tropical cyclone forecasts have improved, and could continue to improve.

Whose Flood Is It, Anyway?

When water-laden air lifts up the eastern slope of the Rockies, enormous thunderstorms and catastrophic flooding can develop. Americans may remember well the sudden, deadly inundation of Boulder, Colorado, in September 2013. For Canadians, however, the big flood that year was in Alberta.

Four years ago this week, 19-23 June 2013, a channel of moist air jetted westward up the Rockies and dumped a foot of rain on parts of Alberta, Canada. The rains eventually spread from thunderstorms along the slopes to a broader stratiform shield. Five people died and 100,000 fled their homes, many in Calgary. At more than $5 billion in damage, it was the costliest natural disaster in Canadian history until last year’s Fort McMurray fire.

While we might call it a Canadian disaster, the flood had equally American origins. A new paper in early on-line release for the Journal of Hydrometeorology shows why.

The authors, Yanping Li of the University of Saskatchewan and a team of others from Canadian institutions, focused mostly on how well such mountain storms can be simulated in forecast modeling. But they also traced the origins of the rain water. Local snowmelt and evaporation played a “minor role,” they found. “Overall, the recycling of evaporated water from the U.S. Great Plains and Midwest was the primary source of moisture.”

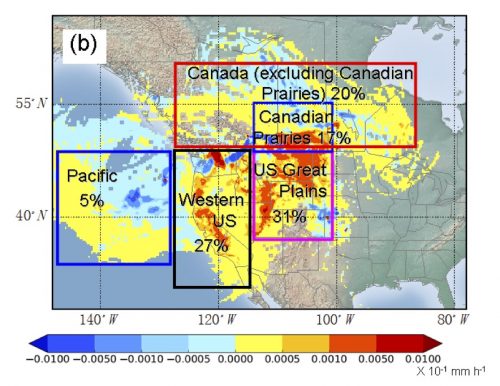

Here is what the distribution of sources looked like. The colors show net moisture uptake from 6 hours to 7 days before the storm:

Some of the water came from as far east as the Great Lakes, and more than half from the United States. While storms along the eastern slopes of the Rockies often get Gulf of Mexico moisture, in this case, Gulf air had already dumped its moisture on the U.S. Plains. In other words, the soaked Plains merely recycled Gulf moisture back into the air to be carried into Canada.

American floods, Canadian floods, and any combination thereof—Li et al. remind us of the cross-border interdependence of weather, water, and climate … a relationship not just for this week but for the future:

The conditions of surface water availability (e.g. droughts) or agricultural activities over the US Great Plains could exert indirect but potentially significant effects on the development of flood-producing rainfall events over southern Alberta. Future land use changes over the US Great Plains together with climate change could potentially influence these extreme events over the Canadian Prairies.

For more perspectives on this noteworthy flood, take a look at another new paper in early online release–Milrad et al. in Monthly Weather Review–or at the companion papers to the Journal of Hydrometeorology paper: Kochtubajda et al. (2016) and Liu et al. (2016) in Hydrological Processes.

Cruising the Ocean’s Surface Microlayer

Oceans are deep, and they are integral to the climate system. But the exchanges between ocean and atmosphere that preoccupy many scientists are not in the depths but instead in the shallowest of shallow layers.

A lot happens in the topmost millimeter of the ocean, a film of liquid called the “sea-surface microlayer” that is, in many ways, a distinct realm. At this scale, exchanges with the atmosphere are more about diffusion, conduction, and viscosity than turbulence. But the layer is small and difficult to observe undisturbed and over sufficient areas. As a result, “it has been widely ignored in the past,” according to a new paper by Mariana Ribas-Ribas and colleagues in the Journal of Atmospheric and Oceanic Technology.

Nonetheless, Ribas-Ribas and her team, based in Germany, looked for a new way to skim across and sample the critical top 100 micrometers (one tenth of a millimeter) of the ocean. This surface microlayer (SML) “plays a central role in a range of global biogeochemical and climate-related processes.” However, Ribas-Ribas et al. add,

The SML often has remained in a distinct research niche, primarily because it was thought that it did not exist in typical oceanic conditions; furthermore, it is challenging to collect representative SML samples under natural conditions.

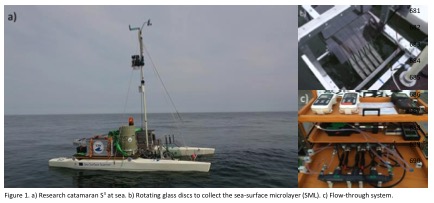

In their paper (now in early online release), the authors report on their solution to observing is a newly outfitted remote-controlled catamaran. A set of rotating glass discs with holes scoops up water samples. Pictured below are the catamaran and (at left, top) the glass discs mounted between the hulls and (bottom left) the flow-through system.

Catamarans are not new to this research, but they were generally towed behind other vessels and subject to wake effects or were specialized. The new Sea Surface Scanner (S3) takes advantage of better remote control and power supply technology and can pack multiple samplers, sensors, and controls onto one platform. Tests in the Baltic Sea last year showed the ability of S3 to track responses of organisms in the surface microlayer to ocean fronts, upwelling areas, and rainfall. The biological processes in turn affect critical geochemical processes like exchanges of gases and production of aerosols for the atmosphere.

The technology may be a fresh start for research looking in depth at the shallowest of layers. See the journal article for more details on the S3 and its performance in field tests.

Withdrawal from the Paris Agreement Flouts the Climate Risks

by Keith Seitter, AMS Executive Director

President Trump’s speech announcing the U.S. withdrawal from the Paris Climate Agreement emphasizes his assessment of the domestic economic risks of making commitments to climate action. In doing so the President plainly ignores so many other components of the risk calculus that went into the treaty in the first place.

There are, of course, political risks, such as damaging our nation’s diplomatic prestige and relinquishing the benefits of leadership in global economic, environmental, or security matters. But from a scientific viewpoint, it is particularly troubling that the President’s claims cast aside the extensively studied domestic and global economic, health, and ecological risks of inaction on climate change.

President Trump put it quite bluntly: “We will see if we can make a deal that’s fair. And if we can, that’s great. And if we can’t, that’s fine.”

The science emphatically tells us that it is not fine if we can’t. The American Meteorological Society Statement on Climate Change warns that it is “imperative that society respond to a changing climate.” National policies are not enough — the Statement clearly endorses international action to ensure adaptation to, and mitigation of, the ongoing, predominately human-caused change in climate.

In his speech, the President made a clear promise “… to be the cleanest and most environmentally friendly country on Earth … to have the cleanest air … to have the cleanest water.” AMS members have worked long and hard to enable such conditions both in our country and throughout the world. We are ready to provide the scientific expertise the nation will need to realize these goals. AMS members are equally ready to provide the scientific foundation for this nation to thrive as a leader in renewable energy technology and production, as well as to prepare for, respond to, and recover from nature’s most dangerous storms, floods, droughts, and other hazards.

Environmental aspirations, however, that call on some essential scientific capabilities but ignore others are inevitably misguided. AMS members have been instrumental in producing the sound body of scientific evidence that helps characterize the risks of unchecked climate change. The range of possibilities for future climate—built upon study after study—led the AMS Statement to conclude, “Prudence dictates extreme care in accounting for our relationship with the only planet known to be capable of sustaining human life.”

This is the science-based risk calculus upon which our nation’s climate change policy should be based. It is a far more realistic, informative, and actionable perspective than the narrow accounting the President provided in the Rose Garden. It is the science that the President abandoned in his deeply troubling decision.

When Art Is a Matter of (Scientific) Interpretation

We’ve seen plenty of examples of scientists inspiring art at AMS conferences. It is also true that art can inspire scientists, as in the kick-off press conference at this week’s European Geosciences Union General Assembly in Vienna, Austria.

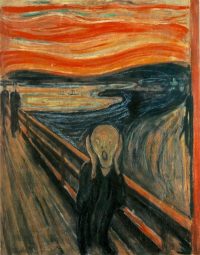

A team of scientists came forward with a new hypothesis about the origins of one of the icons of Western art–Edvard Munch’s The Scream. Since 1892, the man melting down on a bridge under a wavy, blood-red Oslo sunset has been a pillar of the modern age precisely because it expresses interior mentality more than objective observation. Or so art history tells us.

To be fair, some art historians also have made clear that there are honest clouds in Munch’s painting. In a 1973 monograph, the University of Chicago’s Reinhold Heller acknowledged Munch’s “faithfulness to meteorological and topographical phenomena” in a precursor canvas, called Despair. Even so, Heller went on to say that Munch’s vision conveyed “truthfulness solely in its reflection of the man’s mood.”

Take a Khan Academy course on the history of art and you’ll learn that Munch was experiencing synesthesia—“a visual depiction of sound and emotion….The Scream is a work of remembered sensation rather than perceived reality.”

Leave it to physical scientists, then, to remind us that nature, as an inspiration for artists, is far stranger than art historians imagine. Indeed, faced with The Scream, scientists have been acting just like scientists: iterating through hypotheses about what the painting really shows.

In a 2004 article in Sky and Telescope magazine, Russell Doescher, Donald Olson, and Marilynn Olson argued that Munch’s vision was inspired by sunsets inked red after the eruption of Krakatau in 1883.

More recently, atmospheric scientists have debunked the volcanic hypothesis and posited alternatives centered on specific clouds. In his 2014 book on the meteorological history of art, The Soul of All Scenery, Stanley David Gedzelman points out that the mountains around Oslo could induce sinuous, icy wave clouds with lingering tint after sunset. The result would be brilliant undulations very much like those in the painting.

At EGU this week, Svein Fikke, Jón Egill Kristjánsson, and Øyvind Nordli contend that Munch was depicting a much rarer phenomenon: nacreous, or “mother of pearl,” clouds in the lower stratosphere. They make their case not only at the conference this week, but also in an article just published in the U.K. Royal Meteorological Society’s magazine, Weather.

Munch never revealed exactly when he saw the sunset that startled him. As a result, neither cloud hypothesis is going to be confirmed definitively.

Indeed, to a certain extent, both cloud hypotheses rest instead on a matter of interpretation about the timing of the painting amongst Munch’s works, about his diary, and other eyewitness accounts.

The meteorology, in turn, is pretty clear: The Scream can no longer be seen as solely a matter of artistic interpretation.

Washington Forum to Explore Working with the New Administration

by Keith Seitter, AMS Executive Director

The AMS Washington Forum, held each spring, is organized by the Board on Enterprise Economic Development within the Commission on the Weather, Water, and Climate Enterprise. It brings together leaders from the public, private, and academic sectors for productive dialogue on issues of relevance to the weather, water, and climate enterprise in this country. Compared to our scientific conferences, it is a small meeting with typically a little more than 100 participants. This allows for a meeting dominated by rich discussion rather than presentations. The Forum takes advantage of being held in Washington, D.C., with panel discussions featuring congressional and executive branch staff, as well as agency leadership. It is no secret that this is one of my favorite meetings of the year, and for many in the atmospheric and related sciences community, the Washington Forum has become a “can’t miss” event on their calendar.

The 2017 Washington Forum will be held May 2–4, 2017 at the AAAS Building, 1200 New York Avenue, Washington, DC. (Note that this year’s Forum occurs later in the year than usual.) The organizing committee has put together an outstanding program again this year under the timely, and perhaps provocative, theme: “Evolving Our Enterprise: Working Together with the New Administration in a New Collaborative Era.” The transition to a new administration is bringing changes in department and agency leadership that directly impact our community. The Forum will provide a terrific opportunity to explore how the community can collaboratively navigate these changes in ways to ensure continued advancement of the science and services for the benefit of the nation. I am expecting three days of very lively discussion.

We have a special treat this year in conjunction with the Forum. On the afternoon before the Forum formally begins, Monday, May 1, the Forum location at the AAAS Building will host the second Annual Dr. James R. Mahoney Memorial Lecture. The lecture honors the legacy of Mahoney (1938–2015), AMS past-president and a leader in the environmental field in both the public and private sectors, having worked with more than 50 nations and served as NOAA Deputy Administrator in addition to other key government posts. The Mahoney Lecture is cosponsored by AMS and NOAA, and the annual lecture is presented by a person of stature in the field who can address a key environmental science and/or policy issue of the day. We are very pleased to announce that Richard H. Moss, senior scientist at Pacific Northwest National Laboratory’s Joint Global Change Research Institute and adjunct professor in the Department of Geographical Sciences at the University of Maryland, College Park, will deliver the second Mahoney Lecture. The lecture will begin at 4:00 p.m. and will be followed by a reception. The lecture is free and does not require registration to attend.

If you have ever thought about attending the Washington Forum but have not yet done so, this would be a great year to give it a try. We do limit attendance because of space constraints and the desire for this meeting to have a lot of audience participation and discussion, so I would encourage you to register early. You can learn more about the Forum, and register to attend, at the Forum website.

(Note: This letter also appears in the March 2017 issue of BAMS.)

Field Tests for the New GOES-16

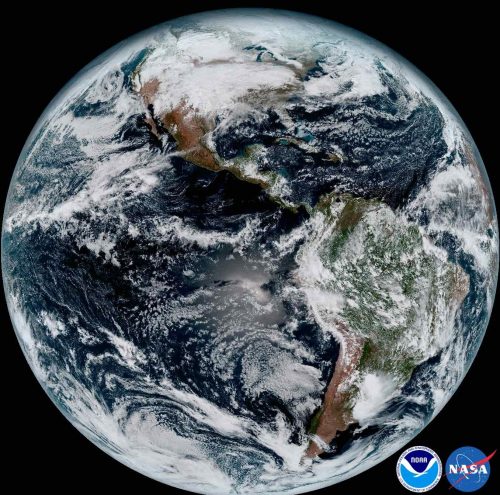

“Who ever gets tired of looking at this thing?” asked Steve Goodman of an appreciative audience when he presented a slide of the new imagery from GOES-16 at the 97th AMS Annual Meeting this January.

The answer was clearly, “Nobody.”

The images from GOES-16 have been dazzling, but the hard work of maximizing use of the satellite is ongoing, especially for Goodman’s agency, the National Environmental Satellite, Data, and Information Service (NESDIS).

The successful launch in November was a major step for the weather community. Compared to the older geostationary satellites, the new technology aboard GOES-16 offers a huge boost in the information influx: 3x improvements in spectral observing, a 4x spatial resolution advantage, and 5x temporal sampling upgrades. But new capabilities mean new questions to ask and tests to perform.

The satellite is barely up in space and already NOAA is targeting its performance for a major scientific study. Last week was the official start of a three-month study by NESDIS to “fine-tune” the data flowing from our new eye in space.

You can learn more about the GOES-16 Field Campaign in the presentation that Goodman gave at the Annual Meeting. He pointed out that it has been 22 years since the imager was updated, and that the satellite also includes the Geostationary Lightning Mapper (GLM), which is completely new to space.

“We thought it would be good, getting out of the gate, to collect the best validation data that we can,” Goodman said.

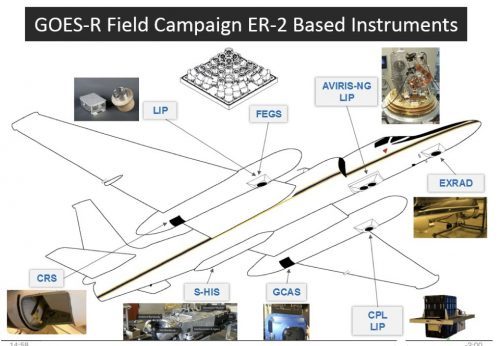

Over a period of 6 weeks, the NASA ER-2 high-altitude jet will fly 100 hours in support of the studies. The flights will be based first from California and then in Georgia, well-timed to coordinate with the tornado field campaign, VORTEX-SE. All the while, the airplane’s downward-looking sensors need to be aimed to match the angle of observation of the satellite-borne sensors. The ER-2 will fly its specially built optical simulator that mimics the GLM.

“That’ll give us optical to optical comparisons,” Goodman noted.

To further check out GLM’s performance, there will also be underpasses from the International Space Station, which now has a TRMM-style lightning detector of its own. “That’s a well-calibrated instrument—we know its performance,” Goodman added.

Meanwhile lower-orbit satellites will gather data from “coincident overpasses” to coordinate with the planes, drones, and ground-based observing systems.

Such field campaigns are a routine follow-up to satellite launches. “Field campaigns are essential for collecting the reference data that can be directly related to satellite observations,” Goodman said. He raises a number of examples of uncertainties that can now be cleared up. For example, some flights will pass over Chesapeake Bay, which provides a necessary “dark” watery background: “We didn’t know how stable the satellite platform would be, so there’s concern about jitter for the GLM…so we want to know what happens looking at a bright cloud versus a very dark target in side-by-side pixels.”

Goodman said tests of the new ABI, or Advanced Baseline Imager, involve checking the mirror mechanism that enables north-south scanning. For validation, the project will position a team of students with handheld radiometers in the desert Southwest, but also do a first-time deployment of a radiometer aboard an unmanned aerial system.

The expected capabilities of the ABI, with its 16 spectral channels, are featured in an article by Timothy Schmit and colleagues in the April issue of the Bulletin of the American Meteorological Society.