It hasn’t even been a month since violent, history-making tornadoes made headlines across the United States, and yet here we are with another grim tornado record. The death toll from the violent tornado that shredded as much as a third of Joplin, Missouri, Sunday evening reached 116 Monday afternoon. That makes it the single deadliest tornado to strike the United States since NOAA began keeping reliable records of tornado fatalities in 1950. It took the top spot from the Flint, Michigan, twister of June 8, 1953, which killed 115.

The number of dead in Joplin jumped from 89 earlier in the day as both recoveries and rescues were reported. While the death toll is expected to rise further, news outlets reported that at least five missing families were found buried alive in the rubble, which stretches block after unrecognizable block across six miles of the southwestern Missouri city of 50,000. More than 500 people in Joplin were injured, and the damage eerily resembles the utter carnage witnessed in Tuscaloosa on April 27, 2011, when 65 died in that day’s twister.

The tornado responsible for the greatest loss of life on American soil is the “Tri-State” tornado of March 18, 1925, which rampaged across southeastern Missouri, southern Illinois, and into southwestern Indiana. It killed 695 people on its seemingly unending 219-mile journey. But that was before we understood tornado families and the way long-lived supercell thunderstorms cyclically form, mature, dissipate, and re-form tornadoes, keeping damage paths seemingly continuous.

Before the U.S. Weather Bureau, precursor to the National Weather Service and NOAA, began maintaining detailed accounts of tornadoes, and 64 years before yesterday’s event in Joplin, the most recent tornado in the long list of U.S. killers with a higher toll than Joplin’s was the 1947 Woodward, Oklahoma, tornado, which claimed 181 lives.

Yesterday a destructive tornado that hit Minneapolis also caused one fatality. Combined with Joplin’s toll, last month’s back-to-back tornado outbreaks, and a handful of earlier tornado deaths this year, that brings 2011’s death toll from tornadoes to 482, more than eight times the average of the past 50 years and second (in the modern era) only to the 519 recorded deaths from twisters in 1953. Two-thirds of this year’s fatalities occurred during April 27’s epic tornado outbreak across the South.

The Weather Channel has been providing continuing coverage of the rescue and recovery efforts in Joplin, with one of its crews arriving on scene moments after the tornado. The level of destruction in the city was too much to bear even for one of its seasoned on-air meteorologists. TWC, along with NOAA’s Storm Prediction Center, is also reporting on the possibility of yet another tornado outbreak, this time in the central Plains on Tuesday.


Struggling to Categorize an Epic Tornado Outbreak

The numbers practically defy comprehension: 327 people killed in a single day; nearly that many reports of tornadoes that fateful day, April 27, 2011, when nature went on a spectacular rampage across the South; violent tornadoes in Mississippi, Alabama, Tennessee, and Georgia that generated winds of 180 mph, 190 mph, over 200 mph, ripping bark from trees, pavement from roads, even earth from the ground (video from The Weather Channel explains the scouring), and people from their homes and businesses, in cities and towns small and large; tornadoes that chewed up and spat out lives and belongings, mile after mile, for 132 miles straight in one twister.

Having watched similarly violent yet lesser events unfold over the years and decades as warning times have steadily increased, in some cases long enough to jump in your car and drive out of harm’s way, we did not think it possible we’d ever again witness such devastation and widespread death from tornado winds, especially in a nation that has the best warning system for severe weather in the world. As has become painfully clear these last two weeks, however, to find similarly overwhelming tragedy from tornadoes in the United States in a single day, one has to look way back to 1925 (more than an average human lifetime ago), when either a single, marathon twister or a family of vicious vortices known as the Tri-State Tornado thundered across 219 consecutive miles of the Midwest and consumed nearly 700 lives.

Annual tornado climatology and statistics for our nation make the historic events of April 27, 2011, even more difficult to believe. On average, 60 people lose their lives to tornadoes each year, and for the past decade we’ve considered that number excessive. It takes 365 days to produce the roughly 1,300 tornadoes we usually have in a year, yet almost a quarter of that total may now have happened in a single 24-hour period (there were 292 reports of tornadoes on April 27, and the NWS has confirmed 143 so far). And fewer than 1% of all tornadoes recorded on our soil over the decades churn with such violence that they are rated in the top tier of the Fujita tornado intensity scale, regardless of its evolving criteria … yet at least three of these most destructive tornadoes struck Mississippi and Alabama on this recent deadly April day.

Stunned weather enthusiasts and meteorologists alike have been searching for more than a week for ways to classify this maelstrom of tornadoes. Some have tried to fit this event into the mold created by the most prolific and extraordinary tornado event in U.S. history—the Super Outbreak of April 3-4, 1974, which in just 18 hours delivered 148 tornadoes that tore up more than 2,500 miles of 13 Midwestern, Southern, and Eastern states and included 30 tornadoes rated violent 4s and 5s at the top end of the original Fujita tornado intensity scale.

Bloggers are beginning to offer comparisons between April 27 and that historic day, nearly four decades ago, especially because the Super Outbreak’s toll of 330 killed is comparable. The event two weeks ago is considered a “textbook” tornado outbreak: “I mean, literally what I learned from a textbook more than 30 years ago,” writes Senior Forecaster Stu Ostro of The Weather Channel (TWC) on his blog. “Not only were the (atmospheric) elements perfect for a tornado outbreak, they were present to an extreme degree,” he notes. It was of the caliber defined by the Palm Sunday outbreak of 1965, the blitzkrieg of twisters across the Ohio Valley on May 29-30, 2004, and the Super Outbreak, which Greg Forbes, TWC Severe Weather Expert, says has since become the benchmark for all tornado outbreaks. Indeed, just last year Storm Prediction Center (SPC) tornado and severe weather forecaster Steve Corfidi et al. published the article “Revisiting the 3–4 April 1974 Super Outbreak of Tornadoes,” in which they state: “The Super Outbreak of tornadoes over the central and eastern United States on 3–4 April 1974 remains the most outstanding severe convective weather episode on record in the continental United States.”

What happened on April 27, 2011, however, may have rewritten the tornado textbook and reset that benchmark, according to Jon Davies, a meteorologist and noted Great Plains storm chaser who has been studying severe weather forecasting for 25 years. He wrote in a blog post last week that the setup for tornadoes on April 27, 2011, was “rare and quite extraordinary.” The combination of low-level wind shear and atmospheric instability was “optimum,” creating a “tragic and historic tornado outbreak [that] was unprecedented in the past 75 years of U.S. history, topping even the 1974 ‘Super Outbreak.’”

That comparison is the subject of ongoing debate as the severity of the tornadoes defining the April 27 outbreak comes into better focus. So far, 14 of its tornadoes have been rated 4 or greater on the Enhanced Fujita (EF) Scale. With more than twice that many in the Super Outbreak, which were rated using the earlier Fujita tornado intensity scale (compare the two scales), it would seem the new event falls short. But Forbes cautions that comparing the April 27 outbreak with the Super Outbreak simply by the number of violent tornadoes has its pitfalls.

“I was part of the team that developed the EF Scale, and it’s a system that more accurately estimates tornado wind speeds. But it troubled me then (and still does) that it might be hard to compare past tornado outbreaks with future ones and determine which was worst. It’s apples and oranges, to some extent, in the rating systems then and now.”

That’s a statement that could be applied to today’s severe weather forecasting, observation technology, and severe weather warnings, all of which have improved significantly in the last 37 years. In his blog post “Recipe for calamity,” NCAR meteorologist Bob Henson looks beyond the ingredients that caused the April 27 tornado outbreak and considers why the human toll of this tragedy was so massive. He mentions that some parts of the South had been hit by severe thunderstorms and damaging winds earlier that day, which knocked out power and may have disrupted communications in areas that tornadoes barreled through later in the afternoon and evening. The speed of the twisters and the lack of safe places to shelter from such extreme winds also likely contributed to the toll. Also noteworthy is the suggestion that the density of the population centers hit by such dangerous winds may have produced a far greater toll than would otherwise have occurred.

This insight comes from Roger Edwards, a forecaster and tornado researcher with SPC, who has a long track record of trying to better educate the public about the realities of severe storms and tornadoes. He blogged about the catastrophe on April 27, 2011, offering this matter-of-fact consideration: “When you have violent, huge tornadoes moving through urban areas, they will cause casualties.” In a subsequent post on the April 27 tornado outbreak, Edwards follows this statement with a poignant look at where we seem to be in the inevitable collision of an expanding population with bouts of severe weather:

“I can say fairly safely that a major contributor here clearly was population density. Even though 3 April 1974 affected a few decent sized towns (Xenia, Brandenburg) and the suburbs of one big metro (Sayler Park, for Cincinnati), a greater coverage area of heavy developed land seemed to be in the way of this day’s tornadoes. How much? I’d like to see a land-use comparison. Harold Brooks and Chuck Doswell [current and retired tornado scientists with the National Severe Storms Laboratory, respectively] have discussed the phenomenon of sprawl as a factor in future tornado death risk and the possible nadir of fatalities having been reached…and the future seems to have arrived.”

Viewing this spring’s deadly onslaught of severe weather through that lens makes it all too clear we’ve been here before: recall a late-August Monday on the central Gulf Coast nearly six years ago shattered by an almost unimaginable, hurricane-whipped surge of seawater that drowned coastal communities in southeast Louisiana, Mississippi, and Alabama. Ostro prefers to use the perspective that event gave us when sizing up the April 27 tornado event:

“So that makes this the Katrina of tornado outbreaks, in the sense that it’s a vivid and tragic reminder that although high death tolls from tornadoes and hurricanes are much less common than they were in the 19th and first part of the 20th centuries, we are not immune to them, even in this era of modern meteorological and communication technology.”

A focus on the human toll of the April tornado tragedy might be the facet that really sets this outbreak apart. One of the most remarkable reports came from Jeff Masters’ blog entry of May 5, 2011, eight days after the outbreak. Noting the extreme number of deaths from the tornadoes and how the toll had fluctuated because some victims were counted twice, he wrote: “There are still hundreds of people missing from the tornado, and search teams have not yet made it to all of the towns ravaged by the tornadoes.” Hundreds missing. Even if that number is off by a factor of 10, this outbreak will be historic for its toll in human lives. Almost two weeks after the outbreak, Alabama’s Emergency Management Agency continues to post daily situation reports stating that the number of casualties in the state is “to be published at a later time.” The reasons are spelled out vividly in this blog post from The Huntsville Times, which details accounts from survivors of perhaps the day’s most violent tornado, an EF5 with winds estimated at 210 mph that destroyed much of Hackleburg, a town of 1,600 people in northwest Alabama. At least 18 people lost their lives there. Twenty-one-year-old resident Tommy Quinn said only one person died on his street, the Times reports. But six days after the tornado obliterated the area, a neighbor still hadn’t been found. “We can’t even find her house,” Quinn said.

As is evident in this blend of writings and perceptions, the devastatingly deadly tornado outbreak of April 27, 2011, was an epic event, rivaling even the most storied such disaster in the modern era. What these experts and others are finding, though, is that while the April 27 outbreak was exceptional in many ways, and history will likely reveal it to be meteorologically unprecedented in some respects, the Super Outbreak of 1974 won’t be stripped of its meteorological prominence, at least not fully. Instead, the truly remarkable outbreak of April 27 will be remembered on its own terms, likely earning a signature nom de guerre through its shockingly violent legacy.

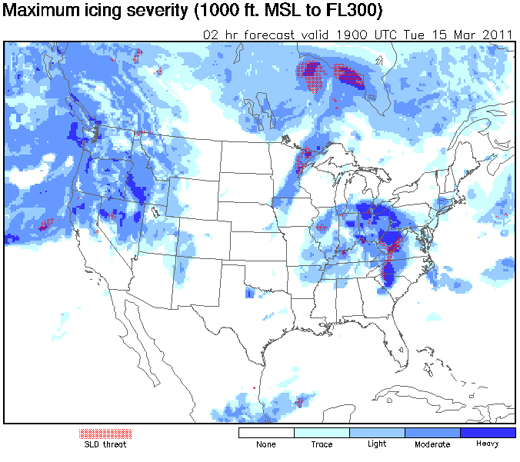

Clearing Up Aviation Ice Forecasting

NCAR has developed a new forecasting system for aircraft icing that delivers 12-hour forecasts, updated hourly, for airspace over the continental United States. The Forecast Icing Product with Severity, or FIP-Severity, evaluates cloud-top temperatures, atmospheric humidity in vertical columns, and other conditions, then uses a fuzzy logic algorithm to identify cloud types and the potential for precipitation in the air, ultimately forecasting both the probability and the severity of icing. The system updates a previous NCAR forecast product that could estimate only uncalibrated icing “potential.”

The new icing forecasts should be especially valuable for commuter planes and smaller aircraft, which are more susceptible to icing accidents because they fly at lower altitudes than commercial jets and often lack de-icing equipment. The new FIP-Severity icing forecasts are available on the NWS Aviation Weather Center’s Aviation Digital Data Service website.

According to NCAR, aviation icing incidents cost the industry approximately $20 million per year, and in recent Congressional testimony, the chairman of the National Transportation Safety Board (NTSB) noted that between 1998 and 2007 there were more than 200 deaths resulting from accidents involving ice on airplanes.

Arctic Ozone Layer Looking Thinner

The WMO announced that observations taken from the ground, weather balloons, and satellites indicate that the stratospheric ozone layer over the Arctic declined by 40% from the beginning of the winter to late March, an unprecedented reduction in the region. Bryan Johnson of NOAA’s Earth System Research Laboratory called the phenomenon “sudden and unusual” and pointed out that it could bring health problems for those in far northern locations such as Iceland and northern Scandinavia and Russia. The WMO noted that as of late March the thinning ozone was shifting from over the North Pole to Greenland and Scandinavia, suggesting that ultraviolet radiation levels in those areas will be higher than normal, and the Finnish Meteorological Institute followed with its own announcement that ozone levels over Finland had recently declined by at least 30%.

Previously, the greatest seasonal loss of ozone in the Arctic region was around 30%. Unlike the ozone layer over Antarctica, which thins out consistently each winter and spring, the Arctic’s ozone levels show greater fluctuation from year to year due to more variable weather conditions.

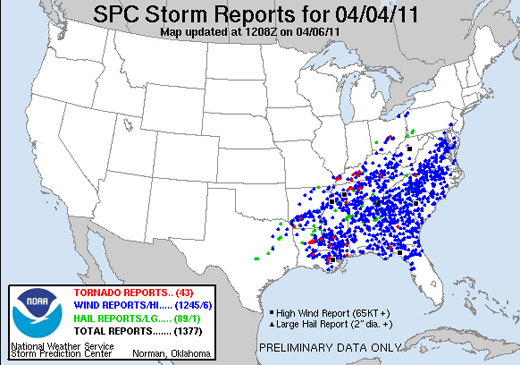

Did Monday's Storm Set a Reporting Record?

A storm system that stretched from the Mississippi River to the mid-Atlantic states on Monday brought more than 1,300 reports of severe weather in a 24-hour period, including 43 tornado events.

The extraordinary number of reports testifies to the intensity of the storm, which has been blamed for at least nine deaths, but it also reflects changes in the way severe weather is reported to, and recorded by, the Storm Prediction Center. As pointed out by AccuWeather’s Brian Edwards, the SPC recently removed filters that previously screened out multiple reports of the same event made within 15 miles of each other. Essentially, all reports are now accepted, regardless of their proximity to other reports. Additionally, with ever-improving technology, almost certainly more people are sending reports from more locations than ever before (especially in highly populated areas such as much of the region covered by this storm).

So while the intensity of the storm may not set any records, the reporting of it is one for the books. According to the SPC’s Greg Carbin, Monday’s event was one of the three most reported storms on record, rivaled only by events on May 30, 2004, and April 2, 2006.
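The kind of proximity filter the SPC reportedly dropped can be pictured with a minimal Python sketch. It is purely illustrative and is not the SPC’s actual code: the `Report` fields, the haversine distance, the greedy keep-the-first rule, and the assumption that only reports of the same type count as duplicates are all choices made here for the example; only the 15-mile radius comes from the text.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_MI = 3958.8  # mean Earth radius in statute miles


@dataclass
class Report:
    # Hypothetical report record; the SPC's real data format is not shown here.
    kind: str   # e.g. "tornado", "hail", "wind"
    lat: float  # degrees north
    lon: float  # degrees east (negative in the western hemisphere)


def distance_mi(a: Report, b: Report) -> float:
    """Great-circle (haversine) distance between two reports, in miles."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlam = math.radians(b.lon - a.lon)
    h = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_MI * math.asin(math.sqrt(h))


def filter_reports(reports: list[Report], radius_mi: float = 15.0) -> list[Report]:
    """Greedy proximity filter (assumed rule): keep a report only if no
    already-kept report of the same kind lies within radius_mi."""
    kept: list[Report] = []
    for r in reports:
        if all(k.kind != r.kind or distance_mi(k, r) > radius_mi for k in kept):
            kept.append(r)
    return kept


if __name__ == "__main__":
    reports = [
        Report("hail", 35.00, -97.0),
        Report("hail", 35.05, -97.0),     # about 3.5 miles from the first: a duplicate
        Report("tornado", 35.00, -97.0),  # different kind, so never merged with hail
        Report("hail", 36.00, -97.0),     # about 69 miles away: kept
    ]
    kept = filter_reports(reports)
    print(f"kept {len(kept)} of {len(reports)} reports")  # prints: kept 3 of 4 reports
```

Accepting every report, as the SPC now does, corresponds to skipping `filter_reports` entirely; comparing the two counts shows how a filtering change alone can raise report totals without any change in storm intensity.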

New Tools for Predicting Tsunamis

The SWASH (Simulating WAves till SHore) model sounds like something that would have been useful in predicting the tsunami in Japan. According to its developer, Marcel Zijlema of Delft University of Technology, it quickly calculates how tall a wave is, how fast it’s moving, and how much energy it holds. Yet Zijlema admits that it unfortunately wouldn’t have helped in this case. “The quake was 130 kilometers away, too close to the coast, and the wave was moving at 800 kilometers per hour. There was no way to help. But at a greater distance the system could literally save lives.”

SWASH grew out of SWAN (Simulating WAves Nearshore), a model that has been around since 1993 and is used by more than 1,000 institutions around the world. SWAN calculates the heights and speeds of wind-generated waves and can also analyze waves generated elsewhere by a distant storm. The program runs on an ordinary computer, and the software is free.

According to Zijlema, SWASH works differently from SWAN. Because the model directly simulates the ocean surface, it can generate film clips that help explain the underlying physics of currents near the shore and how waves break on shore. This makes the model not only extremely valuable in an emergency but also useful for designing effective protection against a tsunami.

Like SWAN, SWASH will be available as a public domain program.

Another tool recently developed by seismologists uses multiple seismographic readings from different locations to match earthquakes to the attributes of past tsunami-causing earthquakes. For instance, the algorithm looks for undersea quakes that rupture more slowly, last longer, and are less efficient at radiating energy. These tend to cause bigger ocean waves than fast-slipping subduction quakes that dissipate energy horizontally and deep underground.

The system, known as RTerg, sends an alert within four minutes of a match to NOAA’s Pacific Tsunami Warning Center as well as the United States Geological Survey’s National Earthquake Information Center. “We developed a system that, in real time, successfully identified the magnitude 7.8 2010 Sumatran earthquake as a rare and destructive tsunami earthquake,” says Andrew Newman, assistant professor in the School of Earth and Atmospheric Sciences at the Georgia Institute of Technology. “Using this system, we could in the future warn local populations, thus minimizing the death toll from tsunamis.”

Newman and his team are working on ways to improve RTerg so that it can shave critical minutes off the time between an earthquake and a warning. They’re also planning to rewrite the algorithm so that all U.S. and international warning centers can use it.

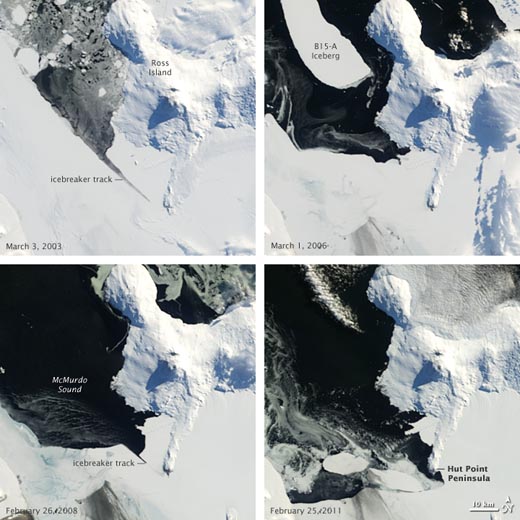

The Evolution of Melting Ice

Off the coast of Antarctica, beyond the McMurdo Station research center at the southwestern tip of Ross Island, lies Hut Point, where in 1902 Robert Falcon Scott and his crew established a base camp for their Discovery Expedition. Scott’s ship, the Discovery, soon became encased in ice at Hut Point and remained there until the ice broke up two years later.

Given recent events, it appears that Scott (and his ship) could have had it much worse. Sea ice in McMurdo Sound off the southwestern coast of Ross Island has recently reached its lowest levels since 1998, and last month the area around the tip of Hut Point became free of ice for the first time in more than 10 years. The pictures below, taken by the Moderate Resolution Imaging Spectroradiometer on NASA’s Terra satellite and made public by NASA’s Earth Observatory, show the progression of the ice melt in the Sound dating back to 2003. The upper-right image shows a chunk of the B-15 iceberg, which when whole exerted a significant influence on local ocean and wind currents and on sea ice in the Sound. After the iceberg broke into pieces, warmer currents gradually dissipated the ice in the Sound; the image at the lower right, taken on February 25, shows open water around Hut Point.

Of course, icebreakers (their tracks are visible in both of the left-hand photos) can now prevent ships from being trapped and research parties from being stranded. Scott could have used that kind of help in 1902…

Lessons of Sendai: The Need for Community Resilience

by William Hooke, AMS Policy Program Director, adapted from two posts (here and there) for the AMS Project, Living on the Real World

Events unfolding in and around Sendai – indeed, across the whole of Japan – are tragic beyond describing. More than 10,000 are thought to be dead, and the toll continues to rise. Economists estimate the losses at some $180B, or more than 3% of GDP. This figure is climbing as well. The images are profoundly moving. Most of us can only guess at the magnitude of the suffering on the scene. Dozens of aftershocks, each as strong as the recent Christchurch earthquake or stronger, have pounded the region. At least one volcanic eruption is underway nearby.

What are the lessons in Sendai for the rest of us? Many will emerge over the days and weeks ahead. Most of these will deal with particulars: for example, a big piece of the concern is for the nuclear plants we have here. Are they located on or near fault zones or coastlines? Well, yes, in some instances. Are the containment vessels weak or is the facility aging, just as in Japan? Again, yes. So they’re coming under scrutiny. But the effect of the tsunami itself on coastal communities? We’re shrugging our shoulders.

It’s reminiscent of those nature films. You know the ones I’m talking about. We watch, fascinated, as the wildebeests cross the rivers where the crocodiles lie in wait to bring down one of the aging or weak. A few minutes of commotion, and then the gnus who’ve made it with their calves to the other side return to business-as-usual. They’ll cross that same river en masse next year, same time, playing Russian roulette with the crocs.

It should be obvious from Sendai, or Katrina, or this past summer’s flooding in Pakistan, or the recent earthquakes in Haiti or Chile, that what we often call recovery isn’t really that at all. Often the people in the directly affected area don’t recover, do they? The dead aren’t revived. The injured don’t always fully mend. Those who suffer loss aren’t really made whole. When we talk about “resilience” we instead must talk at the larger scale of a community that has been struck a glancing blow. Think of resilience as “healing.” A soldier loses a limb in combat. He’s resilient, and recovers. A cancer patient loses one or more organs. She’s resilient, and recovers.

What happens is that the rest of us, the rest of the herd, eventually are able to move on as if nothing has happened. Nonetheless, if we spent as much energy focusing on the lessons from Sendai as we spend on repressing that sense of identification or foreboding, we’d be demonstrably better off.

The reality is that resilience to hazards is at its core a community matter, not a global one. The risks often tend to be locally specific. It’s the local residents who know best the risks and vulnerabilities, who see the fragile state of their regional economy and remember what happened the last time drought destroyed their crops, and on and on.

Similarly, the benefits of building and maintaining resilience are largely local as well, so let’s get real about protecting our communities against future threats. Leaders and residents of every community in the United States, after watching the news coverage of Sendai in the evenings, might be motivated to spend a few hours the following morning building community disaster resilience through private-public collaboration.

What a coincidence! There’s actually a report by that same name from the National Academies’ National Research Council. It gives a framework for private-public collaborations, and some guidelines for how to make such collaborations effective.

Some years ago, Fran Norris and her colleagues at Dartmouth Medical School wrote a paper that has become something of a classic in hazards literature. The reason? They introduced the notion of community resilience, defining it largely by building upon the value of collaboration:

“Community resilience emerges from four primary sets of adaptive capacities–Economic Development, Social Capital, Information and Communication, and Community Competence–that together provide a strategy for disaster readiness. To build collective resilience, communities must reduce risk and resource inequities, engage local people in mitigation, create organizational linkages, boost and protect social supports, and plan for not having a plan, which requires flexibility, decision-making skills, and trusted sources of information that function in the face of unknowns.”

Here’s some more material on the same general idea, taken from a website called learningforsustainability.net:

Resilient communities are capable of bouncing back from adverse situations. They can do this by actively influencing and preparing for economic, social and environmental change. When times are bad they can call upon the myriad of resouces [sic] that make them a healthy community. A high level of social capital means that they have access to good information and communication networks in times of difficulty, and can call upon a wide range of resources.

Taking the texts pretty much at face value, as opposed to a more professional evaluation, do you recognize “resilience” in the events of the past week in this framing?

Maybe yes-and-no. No…if you zoom in and look at the individual small towns and neighborhoods entirely obliterated by the tsunami, or if you look at the Fukushima nuclear plant in isolation. They’re through. Finished. Other communities and other electrical generating plants may come in and take their place. They may take the same names. But they’ll really be entirely different, won’t they? To call that recovery won’t really honor or fully respect those who lost their lives in the flood and its aftermath.

To see the resilience in community terms, you have to zoom out, step back quite a ways, don’t you? The smallest community you might consider? That might be the nation of Japan in its entirety. And even at that national scale the picture is mixed. Marcus Noland wrote a nice analytical piece on this in the Washington Post. He notes that after a period of economic ascendancy in the 1980s, Japan has been struggling for two decades with a stagnating economy, an aging demographic, and dysfunctional political leadership. He notes the opportunity to jump-start the country into a much more vigorous 21st-century role. We’re not weeks or months from seeing how things play out; it’ll take weeks just to stabilize the nuclear reactors, and decades to sort out the longer-term implications.

In a sense, even with this event, you might have to zoom out still further. Certainly the global financial sector, that same sector that suffered its own version of a reactor meltdown in 2008, is still nervously jangled. A globalized economy is trying to sort out just which bits are sensitive to the disruption of the Japanese supply chain, and how those sensitivities will ripple across the world. Just as the tsunami reached our shores, so have the economic impacts.

This is happening more frequently these days. The most recent Eyjafjallajokull volcanic eruption, unlike its predecessors, disrupted much of the commerce of Europe and Africa. In prior centuries, news of the eruption would have made its way around the world at the speed of sailing ships, and the impacts would have been confined to Iceland proper. Hurricane Katrina caused gasoline prices to spike throughout the United States, not just the Louisiana region. And international grain markets were unsettled for some time as well, until it was clear that the Port of New Orleans was fully functional. The “recovery” of New Orleans? That’s a twenty-year work-in-progress.

And go back just a little further, to September 11, 2001. In the decade since, would you say that the United States functioned as a resilient community, according to the above criteria? Have we really bounced back? Or have we instead struggled mightily with “build(ing) collective resilience, communities … reduc(ing) risk and resource inequities, engag(ing) local people in mitigation, creat(ing) organizational linkages, boost(ing) and protect(ing) social supports, and plan(ning) for not having a plan, which requires flexibility, decision-making skills, and trusted sources of information that function in the face of unknowns.”

Sometimes it seems that 9-11 either made us brittle, or revealed a pre-existing brittleness we hadn’t yet noticed…and that we’re still, as a nation, undergoing a painful rehab.

All this matters because such events seem to be on the rise – in terms of impact, and in terms of frequency. They’re occurring on nature’s schedule, not ours. They’re not waiting until we’ve recovered from some previous horror, but rather are piling one on top of another. The hazards community used to refer to these as “cascading disasters.”

Somehow the term seems a little tame today.

The Atmospheric Factor in Nuclear Disaster

The tense nuclear power plant situation in Japan after the recent earthquake has raised questions not only about safety for the calamity-stricken Japanese but also about the possibility that a release of radioactive gas might affect countries far away.

Such questions would seem natural for the U.S. West Coast, where aerosols from Asia have been detected in recent years, creating a media stir about potential health risks. Nonetheless, the risks from a nuclear catastrophe in Japan are considered very, very low. Yesterday the San Francisco public radio station KQED aired an interview with atmospheric scientist Tony VanCuren of the California Air Resources Board about how a worst-case scenario would have to develop.

VanCuren emphasized that until an actual release is observed and measured, it’s very difficult to quantify risks, but he also made a few cautious speculative points:

It depends upon the meteorology when the release occurs. If the stuff were caught up in rain then it would be rained into the ocean and most of the risk would be dissipated before it could make it across the Pacific. If it were released in a dry air mass that was headed this way then more of it could make it across the Pacific.

VanCuren says that such transport would not happen with just any release of radioactive gas. There has to be a lot of push from a fire or other heat source, similar to what happened at Chernobyl in 1986:

If there were a very energetic release–either a very large steam explosion or something like that that could push material high into the atmosphere…a mile to three miles up in the atmosphere–then the potential for transport would become quite significant. It would still be quite diluted as it crossed the Pacific and large particles would fall out due to gravity in the trip across the Pacific. So what would be left would be relatively small particles five microns or less in diameter and they would be spread out over a very wide plume by the time it arrived in North America.

VanCuren noted that the difference between the older graphite-moderated Chernobyl reactor and the reactors in Japan makes such releases less likely now, and that findings from Chernobyl showed that the main health risks were confined to the immediate area around the reactor.

Japanese scientists were among those who took the lead after the 1986 Chernobyl release in simulating the long-range atmospheric transport of radioactive material. A modeling study in the Journal of Applied Meteorology by Hirohiko Ishikawa suggested that the westward and southward spread of low concentrations of radioactive particles found in Europe may have been sustained by resuspension of those particles back into the atmosphere.

Such findings recall the tail end of a whole different era in meteorology.

Decades ago, tracking radioactive particles released explosively into the atmosphere was a major topic in meteorology. See, for example, the Journal of Meteorology paper “Airborne Measurement of Atomic Particles,” which Les Machta et al. based on bomb tests in Nevada in 1956. (In a 1992 BAMS article, Machta told the story of how meteorological trajectory analysis helped scientists identify the date and place of the first Soviet nuclear tests.)

One finds papers in the AMS archive about fallout dispersion, atmospheric waves, and other effects of nuclear explosions. Of course, radioactive particles in the atmosphere were not only of interest for health and national security reasons. These particles were excellent tracers for studying then poorly understood atmospheric features such as jet-stream and tropopause dynamics.

In 1955 there was even a paper in Monthly Weather Review by D. Lee Harris refuting a then-popular notion that rising counts of tornadoes in the United States were caused by nuclear weapons tests. Since the 1963 Partial Test Ban Treaty, however, most nations have pledged not to conduct nuclear weapons tests in the atmosphere, underwater, or in space, stemming the flow of such research projects (but not the inexorable rise in tornado counts as reporting has improved).

While the initial flurry of meteorological work spurred by the Atomic Age inevitably slowed down (a spate of nuclear winter questions aside), the earthquake and tsunami in Japan will undoubtedly shake loose new demands on geophysical scientists, and maybe dredge up a few old topics as well.

Here Comes the Sun–All 360 Degrees

The understanding and forecasting of space weather could take great steps forward with the help of NASA’s Solar Terrestrial Relations Observatory (STEREO) mission, which recently captured the first-ever images taken simultaneously from opposite sides of the sun. NASA launched the two STEREO probes in October 2006, and on February 6 they finally reached positions 180 degrees apart, where each could photograph half of the sun. The STEREO probes are tuned to extreme ultraviolet wavelengths that allow them to monitor solar activity such as sunspots, flares, solar tsunamis, and magnetic filaments. Because of the probes’ positioning, this activity will never be hidden from view, so storms originating on the far side of the sun will no longer come as a surprise. The 360-degree views will also facilitate the study of other solar phenomena, such as the possibility that solar eruptions on opposite sides of the sun can gain intensity by feeding off each other. The NASA clip below includes video of the historic 360-degree view.