A Presidential Session Spotlight from the AMS 104th Annual Meeting

By Katie Pflaumer, AMS Staff

Significantly reducing greenhouse gas emissions requires transitioning primarily to carbon-free sources for energy generation, but many challenges stand in the way. What are these challenges, and how can the weather, water, and climate sector help meet them?

A Presidential Session at the 104th AMS Annual Meeting addressed those questions with panelists Debbie Lew (Executive Director at ESIG, the Energy Systems Integration Group), Alexander “Sandy” MacDonald (former AMS President and former director of the NOAA Earth Systems Research Laboratory), Aidan Tuohy (Director of Transmission Operations and Planning at EPRI, the Electric Power Research Institute), and Justin Sharp (then Owner and Principal of Sharply Focused, now Technical Leader in the Transmission and Operations Planning team at EPRI). Here are some key points from the session, “Transition to Carbon-Free Energy Generation,” which was introduced by NSF NCAR’s Jared Lee and moderated by MESO, Inc.’s John Zack.

Key Points

- Decarbonizing the electric grid is key to reducing U.S. greenhouse gas emissions.

- Wind and solar are now the cheapest forms of energy generation; adoption is increasing, but not fast enough to keep up with the likely growth in demand.

- Energy demand is rapidly increasing, driven by the expansion of data centers, AI applications, crypto mining, and the electrification of transportation and heating. Hydrogen production might greatly increase future loads.

- “Massive buildouts” of both renewable energy plants AND transmission infrastructure are required to reduce emissions.

- A reliable and affordable power system with large shares of wind and solar generation requires accurate historical weather information to inform infrastructure buildout, and accurate forecasts to support operations.

- To avoid expensive infrastructure that’s only used during peak times, electricity pricing must incentivize consumers to avoid excessive use during periods of high demand. This requires accurate weather forecasting.

- Connecting the three main U.S. grids into a “supergrid” could improve transmission and grid flexibility, significantly reducing emissions.

The need for carbon-free energy is urgent

Greenhouse gas emissions are still increasing sharply. In response, global temperatures are rising faster than even the most pessimistic models predicted a few decades ago, noted Lee in his introductory remarks to the panel. The U.S. is the world’s second-largest carbon emitter, despite having a much smaller population than the other top emitters, China and India.

If we don’t solve the greenhouse gas problem by mid-century, warned MacDonald, we will soon hit 700 ppm of carbon dioxide in the atmosphere. If that happens, “We’re back to the Miocene era,” he said, referencing an exceptionally hot period around 12.5 million years ago. “Northern Hemisphere land temperatures will be 11 degrees Fahrenheit warmer. Arctic temps will be 17°F warmer, which is probably going to launch a huge permafrost thaw … The ocean will be 80% more acidic. So we are in an urgent situation.”

What’s the path to a more sustainable future? Decarbonizing the grid.

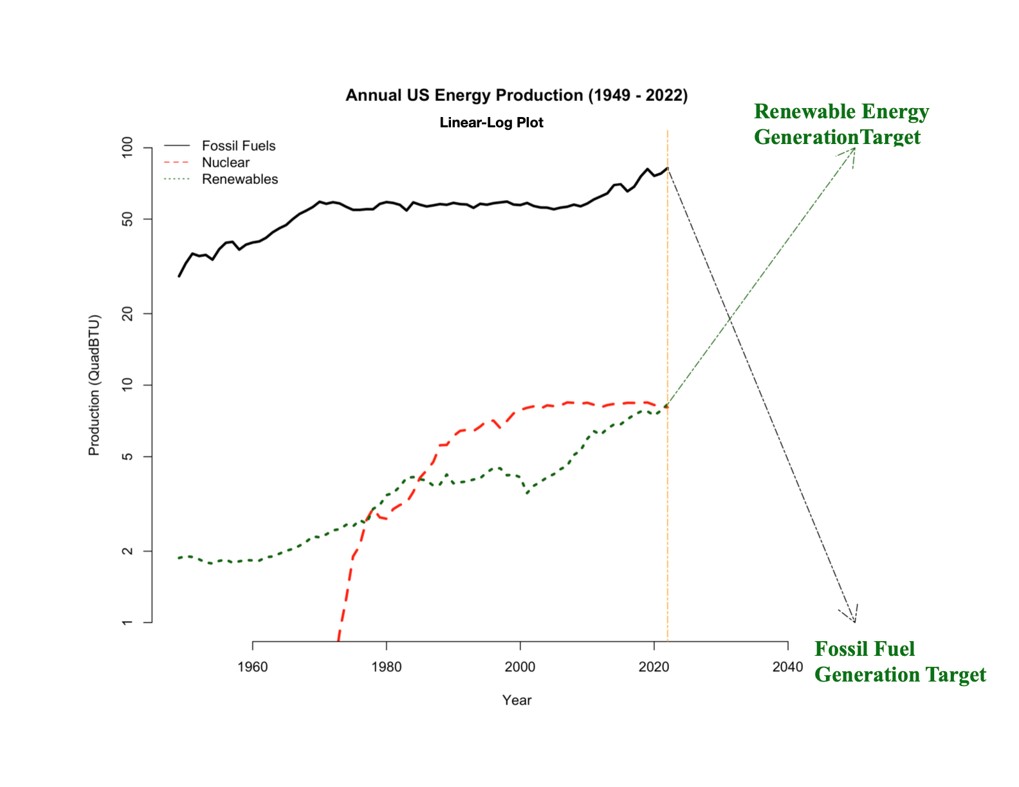

The energy sector is one of the top sources of U.S. emissions—and reducing emissions there will have knock-on effects in buildings and transportation. Lee noted that wind and solar power have dropped dramatically in price, becoming the cheapest forms of energy generation available. This has led to an increase in adoption: renewables are now second only to natural gas in terms of electrical power generated in the United States. Yet natural gas is still growing fast, and still far exceeds the use of renewables.

Therefore, Lew said in her talk, we need “massive buildouts of [wind, solar, and battery] resources … doubling or even tripling the amount of installed capacity. We’re going to be electrifying buildings, transportation, industry [and] massively building out transmission and distribution networks … And we’re going to be using fossil fuel generators for reliability needs.” Doing this could get us to 80–90% fossil-free energy production.

Bridging the gap

But what about that last 10–20%?

“We need some kind of cost-effective, clean, firm resource,” meaning one that’s available 24/7 no matter the weather or season, to fill in the gaps and act as a bridge fuel, said Lew. This resource might end up being hydrogen, advanced nuclear energy, or even green bioenergy with carbon capture and sequestration to offset emissions from natural gas. “We need all options on the table.”

Weather? Or not?

Trying to transition to renewables without building in reliability and resilience will lead to blackouts, Tuohy noted. Such outages would have major economic consequences, reduce the political viability of renewables, and lead to an unjust allocation of energy.

A resilient grid, he said, requires enough energy production to meet future demand; adequate transmission and delivery infrastructure to meet future needs and to balance supply with demand moment-to-moment every day; reliability despite constant shifts in energy production; and the ability to prevent a problem in one place from causing cascading outages across the system.

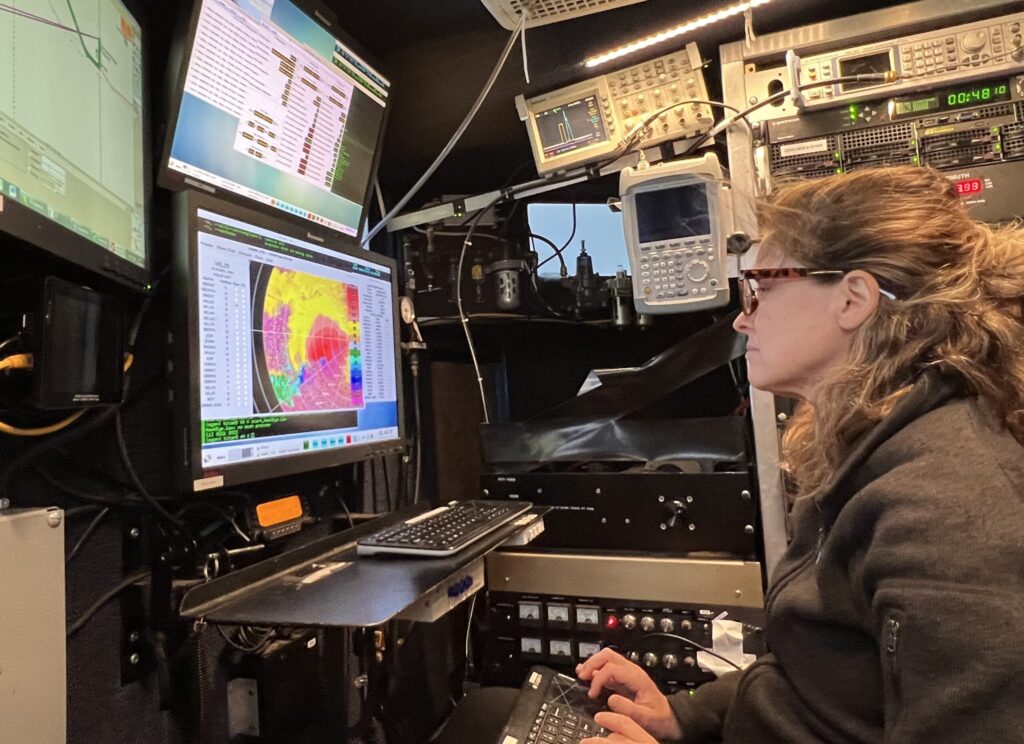

Making a new, wind- and solar-dependent grid truly work means balancing—and forecasting—energy availability and demand across the nation, accounting for the current and predicted weather at each solar and wind energy site, as well as how climate change will affect resource availability. This means a massive meteorological infrastructure must be created.

Read our upcoming post from Justin Sharp to learn more about how weather and renewable energy must work together.

“[This is] an operational need, not a research project … There’s an imperative to have dedicated, accurate, and expertly curated weather information to support the energy transition.”

—Justin Sharp

Uncertainty

Demand on the grid is now subject to extreme variability, and not just because of weather and climate, Tuohy said. For example, demand projections from 2022 and from 2023 differed radically because of new energy-intensive data centers coming online.

“We’ve gone from a kind of deterministic system — [in which we] had good sense of, our peak demand’s going to happen in July—to a far more stochastic and variable type, both on the demand and the supply side,” said Tuohy. We have a lot of data and computational tools, but we must be able to bring those datasets together effectively so we can analyze and predict change. “We need to … develop tools that account for [uncertainty].”

Changing behavior

The infrastructure required for the necessary expansion of renewable energy generation will be expensive. Keeping the cost manageable means not wasting money to build extra infrastructure that’s only useful during times of peak demand. That means we need to avoid high peaks in energy use.

We know that people can be a lot more conscientious about energy consumption if they think it will save them money. Yet many consumers are currently sheltered from the financial consequences of overloading the grid. “There’s tremendous flexibility in load if you … expose consumers to better price signals,” Lew said.

Consumers could be financially incentivized, for example, to choose off-peak times to turn on a heater or charge an electric vehicle. Such programs should be carefully designed to minimize negative impacts on vulnerable consumers, but the fact remains that to keep those consumers safe, the climate crisis must be confronted.
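To make the effect of shifting load concrete, here is a toy calculation in Python. It is only a sketch under stated assumptions: the hourly load curve, the fleet of 1,000 electric vehicles, the charger size, and the two electricity rates are all invented for illustration and do not come from the session.

```python
# All numbers below are hypothetical, chosen only to illustrate the idea.

# Hourly base load for one neighborhood, in MW (index 0 = midnight).
base_load = [30, 28, 27, 27, 28, 32, 40, 48, 50, 49, 48, 47,
             47, 48, 50, 54, 60, 68, 72, 70, 62, 50, 40, 34]

n_vehicles = 1000   # assumed number of electric vehicles
charger_kw = 10     # assumed charger power per vehicle
charge_hours = 4    # assumed charging time per vehicle
fleet_mw = n_vehicles * charger_kw // 1000  # 10 MW if all charge at once


def add_block(load, start_hour, hours, mw):
    """Return a copy of `load` with `mw` added for `hours` hours from `start_hour`."""
    shifted = list(load)
    for h in range(start_hour, start_hour + hours):
        shifted[h % 24] += mw  # wrap around midnight
    return shifted


# Everyone plugs in at 5 p.m. versus everyone plugs in at midnight.
evening = add_block(base_load, 17, charge_hours, fleet_mw)
overnight = add_block(base_load, 0, charge_hours, fleet_mw)

peak_base = max(base_load)
peak_evening = max(evening)
peak_overnight = max(overnight)

# Assumed time-of-use rates, in cents per kWh.
peak_rate_cents = 40
offpeak_rate_cents = 12
energy_kwh = charger_kw * charge_hours  # energy per vehicle per charge
cost_evening_cents = energy_kwh * peak_rate_cents
cost_overnight_cents = energy_kwh * offpeak_rate_cents

print(f"Peak without EVs:        {peak_base} MW")
print(f"Peak, evening charging:  {peak_evening} MW")
print(f"Peak, overnight charging: {peak_overnight} MW")
print(f"Cost per charge: {cost_evening_cents} vs {cost_overnight_cents} cents")
```

With these made-up numbers, evening charging raises the neighborhood peak, while overnight charging leaves it unchanged and costs each driver less. The infrastructure has to be sized for the peak, so the price signal is what keeps the extra 10 MW from needing new capacity.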

Supergrid to the rescue?

The main problem with a renewable energy grid, the speakers acknowledged, is transmission: both connecting new generators and moving energy based on supply and demand. “You’ve got to be able to move wind and solar energy around at continental scales,” said MacDonald. A study by ESIG suggested that simply adding a 2-gigawatt transmission line connecting the Texas power grid with the Eastern U.S. power grid would effectively act like 4 GW of extra electricity-generating capacity across the two regions, because their grids experience risk and stress at different, complementary times.
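The arithmetic behind a result like that can be shown with a toy calculation. The Python sketch below is not the ESIG study’s method: the hourly shortfall and surplus figures are invented, the function name `extra_capacity_needed` is hypothetical, and it assumes a lossless line between two regions whose stress hours do not overlap.

```python
def extra_capacity_needed(shortfall_a, shortfall_b, surplus_a, surplus_b, line_gw):
    """Return (need_a, need_b): extra local capacity, in GW, each region must build.

    Each list holds one value per hour, in GW. A region's shortfall in a given
    hour can be covered by imports, limited by the line rating and by the
    neighbor's surplus in that same hour.
    """
    need_a = 0.0
    need_b = 0.0
    for sa, sb, pa, pb in zip(shortfall_a, shortfall_b, surplus_a, surplus_b):
        import_a = min(line_gw, pb, sa)  # what region A can pull from B this hour
        import_b = min(line_gw, pa, sb)  # what region B can pull from A this hour
        need_a = max(need_a, sa - import_a)
        need_b = max(need_b, sb - import_b)
    return need_a, need_b


# Hypothetical four-hour window: region A is short in hour 0, region B in hour 2,
# and each has spare generation while the other is stressed.
shortfall_a = [2.0, 0.0, 0.0, 0.0]
shortfall_b = [0.0, 0.0, 2.0, 0.0]
surplus_a = [0.0, 1.0, 3.0, 1.0]
surplus_b = [2.5, 1.0, 0.0, 1.0]

isolated = extra_capacity_needed(shortfall_a, shortfall_b, surplus_a, surplus_b, line_gw=0.0)
connected = extra_capacity_needed(shortfall_a, shortfall_b, surplus_a, surplus_b, line_gw=2.0)

avoided_gw = sum(isolated) - sum(connected)
print(f"Isolated:  {isolated} GW of new capacity needed")
print(f"Connected: {connected} GW of new capacity needed")
print(f"A 2 GW line avoids {avoided_gw} GW of new generation")
```

In this made-up case each region would need 2 GW of its own new capacity if isolated, for 4 GW in total. A single 2 GW line removes both needs because it carries power in whichever direction is stressed at the time. If the stress hours overlapped, the line would be worth much less.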

A 2016 paper MacDonald and colleagues published in Nature Climate Change suggests that U.S. electricity-sector carbon emissions could be decreased by 80%, with current technology and without increased electricity costs, if the United States implements a “supergrid.” That means connecting all three major electrical grids currently serving the continental United States. When it’s sunny in San Jose and snowing in Cincinnati, you could transmit solar-produced energy to keep Ohio homes warm, rather than generating extra power locally.

It will take a lot of effort, but “if we [start implementing a supergrid] now, in a 40-year transition, we can preserve the environment we have,” MacDonald said. “If we wait until the 2040s, we are basically going to devastate the planet’s life for thousands of years.”

You can view all the AMS 104th Annual Meeting presentations online. Watch this Presidential Session.

Photo at top: Harry Cunningham on Pexels (@harry.digital)