The World Meteorological Organization (WMO) announced that observations from the ground, weather balloons, and satellites indicate that the stratospheric ozone layer over the Arctic declined by 40% between the beginning of winter and late March, an unprecedented loss for the region. Bryan Johnson of NOAA’s Earth System Research Laboratory called the phenomenon “sudden and unusual” and pointed out that it could bring health problems for people in far northern locations such as Iceland, northern Scandinavia, and northern Russia. The WMO noted that as of late March the area of thinned ozone was shifting from over the North Pole toward Greenland and Scandinavia, suggesting that ultraviolet radiation levels in those areas will be higher than normal, and the Finnish Meteorological Institute followed with its own announcement that ozone levels over Finland had recently declined by at least 30%.

Previously, the greatest seasonal loss of ozone in the Arctic region was around 30%. Unlike the ozone layer over Antarctica, which thins out consistently each winter and spring, the Arctic’s ozone levels show greater fluctuation from year to year due to more variable weather conditions.

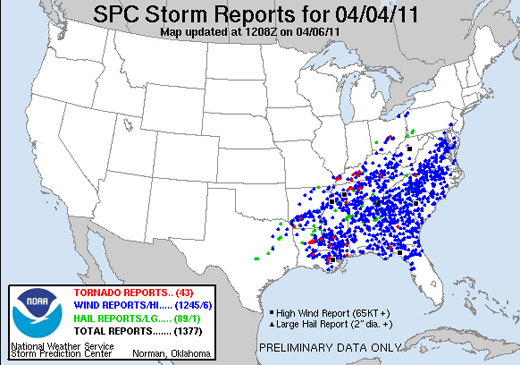

Did Monday's Storm Set a Reporting Record?

A storm system that stretched from the Mississippi River to the mid-Atlantic states on Monday brought more than 1,300 reports of severe weather in a 24-hour period, including 43 tornado events.

The extraordinary number of reports testifies to the intensity of the storm, which has been blamed for at least nine deaths, but it also reflects changes in the way severe weather is reported to, and recorded by, the Storm Prediction Center (SPC). As AccuWeather’s Brian Edwards pointed out, the SPC recently removed filters that had screened out multiple reports of the same event made within 15 miles of each other. Essentially, all reports are now accepted, regardless of their proximity to other reports. Additionally, with ever-improving technology, there are almost certainly more people sending reports from more locations than ever before, especially in densely populated areas like much of the region this storm crossed.

So while the intensity of the storm may not set any records, the reporting of it is one for the books. According to the SPC’s Greg Carbin, Monday’s event was one of the three most reported storms on record, rivaled only by events on May 30, 2004, and April 2, 2006.

New Tools for Predicting Tsunamis

The SWASH (Simulating WAves till SHore) model sounds like something that would have been useful in predicting the tsunami in Japan. According to its developer, Marcel Zijlema of Delft University of Technology, it quickly calculates how tall a wave is, how fast it’s moving, and how much energy it holds. Yet Zijlema admits that it unfortunately wouldn’t have helped in this case. “The quake was 130 kilometers away, too close to the coast, and the wave was moving at 800 kilometers per hour. There was no way to help. But at a greater distance the system could literally save lives.”
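Zijlema’s figures leave very little warning time. Here is a rough check in Python. It is not part of SWASH, and it assumes the wave holds its quoted 800 km/h all the way to the coast. The second function inverts the standard shallow-water wave speed relation, c = √(gh), to show roughly what ocean depth that speed implies. All function names are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def arrival_minutes(distance_km: float, speed_kmh: float) -> float:
    """Travel time in minutes, assuming the wave keeps a constant speed."""
    return distance_km * 60.0 / speed_kmh

def wave_speed_kmh(depth_m: float) -> float:
    """Shallow-water (long-wave) speed c = sqrt(g*h), converted to km/h."""
    return math.sqrt(G * depth_m) * 3.6

def depth_for_speed(speed_kmh: float) -> float:
    """Ocean depth in meters at which a long wave travels at speed_kmh."""
    speed_ms = speed_kmh / 3.6
    return speed_ms ** 2 / G

# Figures quoted by Zijlema for the Japan earthquake.
print(f"Arrival time: {arrival_minutes(130, 800):.2f} min")          # 9.75 min
print(f"Depth implied by 800 km/h: {depth_for_speed(800):.0f} m")    # 5034 m
print(f"Speed over 100 m of water: {wave_speed_kmh(100):.0f} km/h")  # 113 km/h
```

The wave slows as it crosses shallower water near the coast. Because of that, the true arrival time would be somewhat longer than this constant-speed estimate, but it would still be measured in minutes.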

SWASH grew out of SWAN (Simulating WAves Nearshore), a model that has been around since 1993 and is used by more than 1,000 institutions around the world. SWAN calculates the heights and speeds of waves generated by wind and can also analyze waves generated elsewhere by a distant storm. The program can be run on an ordinary computer, and the software is free.

According to Zijlema, SWASH works differently from SWAN. Because the model directly simulates the ocean surface, it can generate film clips that help explain the underlying physics of currents near the shore and how waves break on shore. This makes the model not only extremely valuable in an emergency but also useful for designing effective protection against tsunamis.

Like SWAN, SWASH will be available as a public domain program.

Another tool recently developed by seismologists uses multiple seismographic readings from different locations to match earthquakes to the attributes of past tsunami-causing earthquakes. For instance, the algorithm looks for undersea quakes that rupture more slowly, last longer, and are less efficient at radiating energy. These tend to cause bigger ocean waves than fast-slipping subduction quakes that dissipate energy horizontally and deep underground.

The system, known as RTerg, sends an alert to NOAA’s Pacific Tsunami Warning Center and the United States Geological Survey’s National Earthquake Information Center within four minutes of a match. “We developed a system that, in real time, successfully identified the magnitude 7.8 2010 Sumatran earthquake as a rare and destructive tsunami earthquake,” says Andrew Newman, assistant professor in the School of Earth and Atmospheric Sciences at the Georgia Institute of Technology. “Using this system, we could in the future warn local populations, thus minimizing the death toll from tsunamis.”
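One way to make “less efficient at radiating energy” concrete is the energy-to-moment ratio, Θ = log10(E/M0). Here E is the radiated seismic energy and M0 is the seismic moment. Newman has worked with this discriminant in earlier research, but it is an assumption here that RTerg applies it in exactly this way.

The Python sketch below does two things:
- It converts moment magnitude to M0 with the Hanks–Kanamori relation.
- It flags an event as a possible tsunami earthquake when Θ falls below a cutoff.

The cutoff value and the example energies are hypothetical, chosen only for illustration. The sketch also ignores the rupture-duration test described above.

```python
import math

# Hypothetical cutoff for illustration only; not RTerg's actual decision rule.
THETA_CUTOFF = -5.7

def seismic_moment(mw: float) -> float:
    """Scalar seismic moment M0 in N*m from moment magnitude (Hanks-Kanamori)."""
    return 10 ** (1.5 * mw + 9.1)

def energy_to_moment_ratio(energy_j: float, mw: float) -> float:
    """Theta = log10(E / M0); more negative means less energy radiated per unit moment."""
    return math.log10(energy_j / seismic_moment(mw))

def is_possible_tsunami_earthquake(energy_j: float, mw: float,
                                   cutoff: float = THETA_CUTOFF) -> bool:
    """Flag energy-deficient (slow) ruptures whose Theta falls below the cutoff."""
    return energy_to_moment_ratio(energy_j, mw) < cutoff

# Two hypothetical magnitude 7.8 events with different radiated energies.
for energy in (10 ** 15.9, 10 ** 14.8):
    theta = energy_to_moment_ratio(energy, 7.8)
    print(f"E = {energy:.2e} J  Theta = {theta:.2f}  "
          f"flag = {is_possible_tsunami_earthquake(energy, 7.8)}")
```

Because Θ is a base-10 logarithm, a value one unit lower means the event radiated about a tenth as much energy for the same seismic moment.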

Newman and his team are working on ways to improve RTerg so that it can issue warnings sooner, gaining critical minutes between an earthquake and the alert. They’re also planning to rewrite the algorithm so that all U.S. and international warning centers can use it.

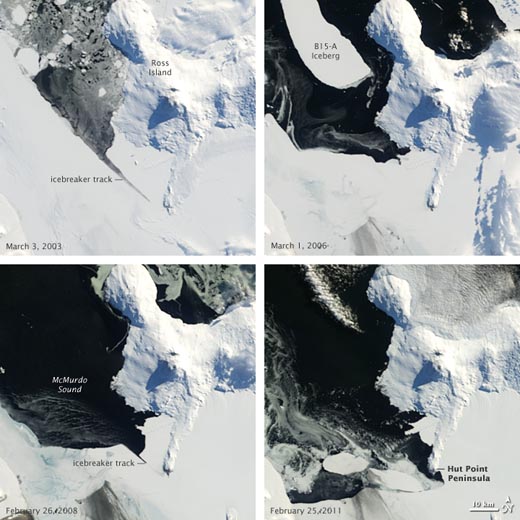

The Evolution of Melting Ice

Off the coast of Antarctica, beyond the McMurdo Station research center at the southwestern tip of Ross Island, lies Hut Point, where in 1902 Robert Falcon Scott and his crew established a base camp for their Discovery Expedition. Scott’s ship, the Discovery, would soon thereafter become encased in ice at Hut Point, and would remain there until the ice broke up two years later.

Given recent events, it appears that Scott (and his ship) could have had it much worse. Sea ice in McMurdo Sound off the southwestern coast of Ross Island has recently reached its lowest extent since 1998, and last month the area around the tip of Hut Point became free of ice for the first time in more than 10 years. The pictures below, taken by the Moderate Resolution Imaging Spectroradiometer (MODIS) on NASA’s Terra satellite and made public by NASA’s Earth Observatory, show the progression of the ice melt in the Sound dating back to 2003. The upper-right image shows a chunk of the B-15 iceberg, which when whole exerted a significant influence on local ocean and wind currents and on sea ice in the Sound. After the iceberg broke into pieces, warmer currents gradually dissipated the ice in the Sound; the image at the lower right, taken on February 25, shows open water around Hut Point.

Of course, icebreakers (their tracks are visible in both of the left-hand photos) can now prevent ships from being trapped and research parties from being stranded. Scott could have used that kind of help in 1902…

Lessons of Sendai: The Need for Community Resilience

by William Hooke, AMS Policy Program Director, adapted from two posts for the AMS Project, Living on the Real World

Events unfolding in and around Sendai – indeed, across the whole of Japan – are tragic beyond description. More than 10,000 people are thought to be dead, and the toll continues to rise. Economists estimate the losses at some $180B, or more than 3% of GDP, and this figure is climbing as well. The images are profoundly moving. Most of us can only guess at the magnitude of the suffering on the scene. Dozens of aftershocks, each as strong as the recent Christchurch earthquake or stronger, have pounded the region. At least one volcanic eruption is underway nearby.

What are the lessons in Sendai for the rest of us? Many will emerge over the days and weeks ahead. Most of these will deal with particulars: for example, a big piece of the concern is for the nuclear plants we have here. Are they located on or near fault zones or coastlines? Well, yes, in some instances. Are the containment vessels weak or is the facility aging, just as in Japan? Again, yes. So they’re coming under scrutiny. But the effect of the tsunami itself on coastal communities? We’re shrugging our shoulders.

It’s reminiscent of those nature films. You know the ones I’m talking about. We watch, fascinated, as the wildebeests cross the rivers where the crocodiles lie in wait to bring down one of the aging or weak. A few minutes of commotion, and then the gnus who’ve made it with their calves to the other side return to business-as-usual. They’ll cross that same river en masse next year, same time, playing Russian roulette with the crocs.

It should be obvious from Sendai, or Katrina, or this past summer’s flooding in Pakistan, or the recent earthquakes in Haiti or Chile, that what we often call recovery isn’t really that at all. Often the people in the directly affected area don’t recover, do they? The dead aren’t revived. The injured don’t always fully mend. Those who suffer loss aren’t really made whole. When we talk about “resilience” we instead must talk at the larger scale of a community that has been struck a glancing blow. Think of resilience as “healing.” A soldier loses a limb in combat. He’s resilient, and recovers. A cancer patient loses one or more organs. She’s resilient, and recovers.

What happens is that the rest of us–the rest of the herd–eventually are able to move on as if nothing has happened. Nonetheless, if we spent as much energy focusing on the lessons from Sendai as we spend on repressing that sense of identification or foreboding, we’d be demonstrably better off.

The reality is that resilience to hazards is at its core a community matter, not a global one. The risks often tend to be locally specific. It’s the local residents who know best the risks and vulnerabilities, who see the fragile state of their regional economy and remember what happened the last time drought destroyed their crops, and on and on.

Similarly, the benefits of building and maintaining resilience are largely local as well, so let’s get real about protecting our communities against future threats. Leaders and residents of every community in the United States, after watching the news coverage of Sendai in the evenings, might be motivated to spend a few hours the following morning building community disaster resilience through private-public collaboration.

What a coincidence! There’s actually a National Academies National Research Council report by that same name. It offers a framework for private-public collaborations, along with guidelines for making such collaborations effective.

Some years ago, Fran Norris and her colleagues at Dartmouth Medical School wrote a paper that has become something of a classic in hazards literature. The reason? They introduced the notion of community resilience, defining it largely by building upon the value of collaboration:

“Community resilience emerges from four primary sets of adaptive capacities–Economic Development, Social Capital, Information and Communication, and Community Competence–that together provide a strategy for disaster readiness. To build collective resilience, communities must reduce risk and resource inequities, engage local people in mitigation, create organizational linkages, boost and protect social supports, and plan for not having a plan, which requires flexibility, decision-making skills, and trusted sources of information that function in the face of unknowns.”

Here’s some more material on the same general idea, taken from a website called learningforsustainability.net:

Resilient communities are capable of bouncing back from adverse situations. They can do this by actively influencing and preparing for economic, social and environmental change. When times are bad they can call upon the myriad of resouces [sic] that make them a healthy community. A high level of social capital means that they have access to good information and communication networks in times of difficulty, and can call upon a wide range of resources.

Taking the texts pretty much at face value, as opposed to a more professional evaluation, do you recognize “resilience” in the events of the past week in this framing?

Maybe yes-and-no. No…if you zoom in and look at the individual small towns and neighborhoods entirely obliterated by the tsunami, or if you look at the Fukushima nuclear plant in isolation. They’re through. Finished. Other communities and other electrical generating plants may come in and take their place. They may take the same names. But they’ll really be entirely different, won’t they? To call that recovery won’t really honor or fully respect those who lost their lives in the flood and its aftermath.

To see the resilience in community terms, you have to zoom out, step back quite a ways, don’t you? The smallest community you might consider? That might have to be the nation of Japan in its entirety. And even at that national scale the picture is mixed. Marcus Noland wrote a nice analytical piece on this in the Washington Post. He notes that after a period of economic ascendancy in the 1980s, Japan has struggled for two decades with a stagnating economy, an aging population, and dysfunctional political leadership. He also notes the opportunity to jump-start the country into a much more vigorous 21st-century role. We’re not weeks or months from seeing how things play out; it’ll take weeks just to stabilize the nuclear reactors, and decades to sort out the longer-term implications.

In a sense, even with this event, you might have to zoom out still further. Certainly the global financial sector, that same sector that suffered its own version of a reactor meltdown in 2008, is still nervously jangled. A globalized economy is trying to sort out just which bits are sensitive to the disruption of the Japanese supply chain, and how those sensitivities will ripple across the world. Just as the tsunami reached our shores, so have the economic impacts.

This is happening more frequently these days. The recent Eyjafjallajökull volcanic eruption, unlike its predecessors, disrupted much of the commerce of Europe and Africa. In prior centuries, news of the eruption would have made its way around the world at the speed of sailing ships, and the impacts would have been confined to Iceland proper. Hurricane Katrina caused gasoline prices to spike throughout the United States, not just in the Louisiana region. And international grain markets were unsettled for some time as well, until it was clear that the Port of New Orleans was fully functional. The “recovery” of New Orleans? That’s a twenty-year work-in-progress.

And go back just a little further, to September 11, 2001. In the decade since, would you say that the United States functioned as a resilient community, according to the above criteria? Have we really bounced back? Or have we instead struggled mightily with “build(ing) collective resilience, communities … reduc(ing) risk and resource inequities, engag(ing) local people in mitigation, creat(ing) organizational linkages, boost(ing) and protect(ing) social supports, and plan(ning) for not having a plan, which requires flexibility, decision-making skills, and trusted sources of information that function in the face of unknowns.”

Sometimes it seems that 9-11 either made us brittle, or revealed a pre-existing brittleness we hadn’t yet noticed…and that we’re still, as a nation, undergoing a painful rehab.

All this matters because such events seem to be on the rise – in terms of impact, and in terms of frequency. They’re occurring on nature’s schedule, not ours. They’re not waiting until we’ve recovered from some previous horror, but rather are piling one on top of another. The hazards community used to refer to these as “cascading disasters.”

Somehow the term seems a little tame today.

The Atmospheric Factor in Nuclear Disaster

The tense nuclear power plant situation in Japan after the recent earthquake has raised questions not only about safety for the calamity-stricken Japanese but also about the possibility that a release of radioactive gas might affect countries far away.

Such questions would seem natural for the U.S. West Coast, where aerosols from Asia have been detected in recent years, creating a media stir about potential health risks. Nonetheless, the risks to the West Coast from a nuclear catastrophe in Japan are considered very, very low. Yesterday the San Francisco public radio station KQED aired an interview with atmospheric scientist Tony VanCuren of the California Air Resources Board about how a worst-case scenario would have to develop.

VanCuren emphasized that until an actual release is observed and measured, it’s very difficult to quantify risks, but he also made a few cautious speculative points:

It depends upon the meteorology when the release occurs. If the stuff were caught up in rain then it would be rained into the ocean and most of the risk would be dissipated before it could make it across the Pacific. If it were released in a dry air mass that was headed this way then more of it could make it across the Pacific.

VanCuren says that such transport would not happen with just any release of radioactive gas. There has to be a lot of push from a fire or other heat source, similar to what happened in Chernobyl in 1986:

If there were a very energetic release–either a very large steam explosion or something like that that could push material high into the atmosphere…a mile to three miles up in the atmosphere–then the potential for transport would become quite significant. It would still be quite diluted as it crossed the Pacific and large particles would fall out due to gravity in the trip across the Pacific. So what would be left would be relatively small particles five microns or less in diameter and they would be spread out over a very wide plume by the time it arrived in North America.
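VanCuren’s point about large particles falling out during the trip can be illustrated with Stokes’ law for the settling speed of a small sphere in air:

v = (ρp − ρa) g d² / (18 μ)

The Python sketch below is an idealized estimate. The particle density, the air properties, and the still-air setting are assumptions, not values from the interview. It also ignores turbulent mixing, rain-out, and the slip correction that matters for submicron particles. Stokes’ law holds only for Reynolds numbers below about 1, which these sizes satisfy.

```python
# Stokes settling: why coarse particles drop out over the Pacific.
# Assumed values (not from the interview): particle density 2000 kg/m^3,
# air density 1.2 kg/m^3, air dynamic viscosity 1.8e-5 Pa*s.
G = 9.81                          # gravitational acceleration, m/s^2
MU_AIR = 1.8e-5                   # Pa*s
RHO_AIR = 1.2                     # kg/m^3
RHO_PARTICLE = 2000.0             # kg/m^3 (assumed)
RELEASE_HEIGHT_M = 3 * 1609.344   # "three miles up"

def settling_velocity(diameter_m: float) -> float:
    """Terminal fall speed (m/s) of a small sphere from Stokes' law."""
    return (RHO_PARTICLE - RHO_AIR) * G * diameter_m ** 2 / (18 * MU_AIR)

def days_to_fall(diameter_m: float, height_m: float = RELEASE_HEIGHT_M) -> float:
    """Days to settle from height_m in still air, ignoring mixing and rain."""
    return height_m / settling_velocity(diameter_m) / 86400.0

for microns in (5, 20, 50):
    d = microns * 1e-6
    print(f"{microns:>3} um: {settling_velocity(d) * 1000:7.2f} mm/s, "
          f"{days_to_fall(d):6.1f} days to fall {RELEASE_HEIGHT_M:.0f} m")
```

Because the speed grows with the square of the diameter, a 50-micron particle falls about 100 times faster than a 5-micron one. That works out to roughly 150 mm/s versus 1.5 mm/s under these assumptions. It would reach the surface from three miles up in well under a day, while the 5-micron particle would take about a month in still air.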

VanCuren noted that the difference between the older graphite-moderated Chernobyl reactor and the reactors in Japan makes such releases less likely now, and that findings from Chernobyl showed that the main health risks were confined to the immediate area around the reactor.

Japanese scientists were among those who took the lead after the 1986 Chernobyl release to simulate the long-range atmospheric transport of radiation. A modeling study in the Journal of Applied Meteorology by Hirohiko Ishikawa suggested that the westward and southward spread of low concentrations of radioactive particles found in Europe may have been sustained by resuspension of those particles back into the atmosphere.

Such findings recall the tail end of a whole different era in meteorology.

Decades ago, the tracking of explosively released radioactive particles in the atmosphere was a major topic in meteorology; see, for example, the Journal of Meteorology paper “Airborne Measurement of Atomic Particles” by Les Machta et al., based on bomb tests in Nevada in 1956. (In a 1992 BAMS article, Machta told the story of how meteorological trajectory analysis helped scientists identify the date and place of the first Soviet nuclear tests.)

One finds papers in the AMS archive about fallout dispersion, atmospheric waves, and other effects of nuclear explosions. Of course, radioactive particles in the atmosphere were not only of interest for health and national security reasons. These particles were excellent tracers for studies of then-poorly understood atmospheric properties like jet-stream and tropopause dynamics.

In 1955 there was even a paper in Monthly Weather Review by D. Lee Harris refuting a then-popular notion that rising counts of tornadoes in the United States were caused by nuclear weapons tests. However, since 1963 most nations have pledged to ban atmospheric, underwater, and space-based nuclear weapons tests, stemming the flow of research projects (but not the inexorable rise of improving tornado counts).

While the initial flurry of meteorological work spurred by the Atomic Age inevitably slowed down (a spate of Nuclear Winter questions aside), the earthquake and tsunami in Japan will undoubtedly shake loose new demands on geophysical scientists, and maybe dredge up a few old topics as well.

Hot Off the Presses

Today marks the release of a new AMS publication, Economic and Societal Impacts of Tornadoes. The book examines data on tornadoes and tornado casualties through the eyes of a pair of economists, Kevin Simmons and Daniel Sutter, whose personal experiences in the May 3–4, 1999, Oklahoma tornadoes led to a project that explored ways to minimize tornado casualties. The book is the culmination of more than 10 years of research. (At the Annual Meeting in Seattle, the authors talked to The Front Page about their new book.)

Check out the online bookstore to purchase this and other AMS titles.

Here Comes the Sun–All 360 Degrees

The understanding and forecasting of space weather could take great steps forward with the help of NASA’s Solar Terrestrial Relations Observatory (STEREO) mission, which recently captured the first-ever images taken simultaneously from opposite sides of the sun. NASA launched the two STEREO probes in October 2006, and on February 6 they finally reached positions 180 degrees apart from each other, where each can photograph half of the sun. The STEREO probes are tuned to extreme ultraviolet wavelengths that allow them to monitor solar activity such as sunspots, flares, tsunamis, and magnetic filaments, and because of the probes’ positioning this activity will never be hidden, so storms originating on the far side of the sun will no longer be a surprise. The 360-degree views will also facilitate the study of other solar phenomena, such as the possibility that solar eruptions on opposite sides of the sun can gain intensity by feeding off each other. The NASA clip below includes video of the historic 360-degree view.

Neutralizing Some of the Language in Global Warming Discussions

By Keith Seitter, Executive Director, AMS

The topic of anthropogenic global warming has become so polarized that it is now hard to talk about it without what amounts to name-calling entering the discussion. In blogs, e-mails, and published opinion pieces, terms like “deniers” and “contrarians” are leveled in one direction while “warmist” and “alarmist” are leveled in the other. Both the scientific community and broader society have much to gain from respectful dialog among those of opposing views on climate change, but a reasonable discussion of the science is unlikely if we cannot find non-offensive terminology for those who have taken positions different from our own.

As Peggy LeMone mentioned in a Front Page post last week, some months ago the CMOS Bulletin reprinted a paper by Anderegg et al., originally published in the Proceedings of the National Academy of Sciences, that simply used the terms “convinced” and “unconvinced” to describe those who had been convinced by the evidence that anthropogenic climate change was occurring and those who had not. This terminology helps in a number of ways. First and foremost, it does not carry the baggage of value judgment, since for any particular scientific argument there is no intrinsically positive or negative connotation associated with being either convinced or unconvinced. In addition, this terminology highlights that we are talking about a scientific, evidence-based issue that should be resolved through logical reasoning and not something that should be decided by our inherent belief system. (And for that reason, I work very hard to avoid saying someone does or does not “believe” in global warming, or similar phrases.)

The sense I have gotten is that those who do not feel that human influence is causing the global temperatures to rise would prefer to be called “skeptics.” However, I have tried to avoid using this term as a label for those individuals. Skepticism is a cornerstone upon which science is built. All of us who have been trained as scientists should be skeptics with respect to all scientific issues — demanding solid evidence for a hypothesis or claim before accepting it, and rejecting any position if the evidence makes it clear that it cannot be correct (even if it had, in the past, been well-accepted by the broader community).

I have seen some pretty egregious cases of individuals who call themselves climate change skeptics accepting claims that support their position with little or no documented evidence while summarily dismissing the results of carefully replicated studies that do not. On the other side, I have seen cases of climate scientists who have swept aside reasonable counter hypotheses as irrelevant, or even silly, without giving them proper consideration. Neither situation represents the way a truly skeptical scientist should behave. All of us in the community should expect better.

We will not be able to have substantive discussions on the many facets of climate change if we spend so much time and energy in name-calling. And we really need to have substantive discussion if we are going to serve the public in a reasonable way as a community. Thus, it is imperative that we find some terminology that allows a person’s position on climate change to be expressed without implied, assumed, or imposed value judgments.

There may be other neutral terms that can be applied to those engaged in the climate change discussion, but “convinced” and “unconvinced” are the best I have seen so far. I have adopted this terminology in the hope of reducing some of the polarization in the discussion.

New from AMS: Joanne Simpson Mentorship Award

The AMS has introduced a new award in recognition of the career-long dedication and commitment to the advancement of women in the atmospheric sciences by legendary pioneering meteorologist Joanne Simpson.

The new Joanne Simpson Mentorship Award recognizes individuals in academia, government, or the private sector, who, over a substantial period of time, have provided outstanding and inspiring mentorship of professional colleagues or students. The award is separate from the honor bestowed upon exceptional teachers mentoring students, which is covered by the AMS Teaching Excellence Award.

Simpson was the first woman to earn a Ph.D. in meteorology, and her distinguished achievements include nearly single-handedly creating the discipline of cloud studies, determining the source of heat energy that drives hurricanes, and leading a decade-long effort at NASA that culminated in the establishment of the ground-breaking Tropical Rainfall Measuring Mission (TRMM). Her career spanned more than 50 years, during which she challenged the male-dominated establishment in meteorology and fought for equal footing for women in the sciences. Although she passed away nearly a year ago, her enduring spirit continues to pave the way for other women pioneers in meteorology and the related sciences.

As with all AMS awards, nominations for the Joanne Simpson Mentorship Award are considered by the AMS Awards Oversight Committee.

Online submission of nominations for the new award will be accepted until May 1. The first Joanne Simpson Mentorship Award will be presented at the 2012 Annual Meeting in New Orleans.