Through July, 2020 has been on almost the same track as 2016: the two years had the hottest first seven months in NOAA’s 141-year dataset of global surface temperatures (land and ocean combined). Since 2016 turned out to be the hottest year on record, it might seem as if this fast start puts 2020 on track to set a new record, too, or at least to come close. NOAA says it’s already “very likely” 2020 will be among the five hottest years on record.

But in the strange reality of ever-rising global temperatures, it’s not so much the first half of the year that puts 2020 on the verge of a record. It’s the underlying warming trend: 2020 was already on the verge on New Year’s Day.

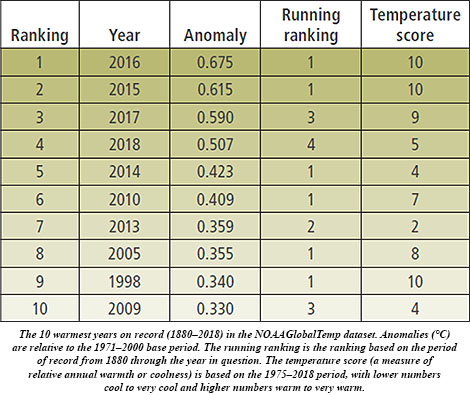

As the new State of the Climate in 2019 released last week points out, the six hottest years in the last century and a half were…exactly the last six years, 2014-2019. Due to global warming, practically every year’s surface temperature is going to be a hot one. Just by showing up at the starting line, every year is a serious threat to set a new standard.

A paper recently published in the Bulletin of the American Meteorological Society puts this relentless streak of rising temperature expectations in terms of probabilities. There’s a greater than 99% chance that most of the next 10 years through 2028 will be ranked among the top 10 warmest.

The study, by Anthony Arguez (NOAA/NCEI) et al., also finds an 82% chance that all years in the next decade will rank in the top 15 warmest years as global warming continues. Its authors suggest that record warm years are already “baked into the cake” of Earth’s global climate and that it would take “an abrupt climate shift for even a few years within the next decade to register outside the top 10 warmest years.”

To determine these odds, the researchers analyzed the monthly version of NOAA’s Merged Land Ocean Global Surface Temperature Analysis Dataset (NOAAGlobalTemp) to project annual global temperature rankings in the future. The ever-shifting expectations for global temperatures render the usual way of keeping tabs on the data—by comparing to 30-year normals—inadequate. So Arguez et al. formulated a new way to compare each year to surrounding years:

We introduce a “temperature score” to help NOAA communicate the coolness or warmth of a given year relative to the long-term trend. We believe this is the first such projected ranking and temperature score currently produced operationally. Our objective is to use this tool to improve the communication of climate change impacts to the general public.

The temperature score from 1 (a very cold year) to 10 (very warm) is useful to distinguish between warmer and colder years relative to the long-term trend. As examples, the authors note that 2008 and 2011 were considerably cooler than surrounding years and below the overall trend, whereas 1998 and 2016 were not only the warmest years on record but were also notably warmer than surrounding years.
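To make the idea of a score "relative to the long-term trend" concrete, here is a minimal sketch in Python. It is not the method Arguez et al. use: the straight-line trend, the rank-based binning into ten groups, the function names, and the anomaly values are all assumptions made up for illustration. It only shows how a year can sit well above or below the trend line regardless of its absolute rank.

```python
def linear_trend(years, temps):
    """Ordinary least-squares line through the (year, temperature) points."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(temps) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, temps))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def temperature_scores(years, temps):
    """Map each year to a 1-10 score based on its departure from the trend.

    Hypothetical scoring rule (an assumption, not the published one):
    rank the residuals from the fitted line and split the ranks into ten
    equal bins, so 1 = coolest relative to trend, 10 = warmest.
    """
    slope, intercept = linear_trend(years, temps)
    residuals = [t - (slope * y + intercept) for y, t in zip(years, temps)]
    order = sorted(range(len(years)), key=lambda i: residuals[i])
    n = len(years)
    scores = {}
    for rank, i in enumerate(order):  # rank 0 = furthest below the trend
        scores[years[i]] = 1 + (rank * 10) // n
    return scores


# Illustrative, made-up anomalies (deg C): a steady 0.02 deg C/yr rise plus
# year-to-year wiggles. These are NOT NOAAGlobalTemp values.
years = list(range(2000, 2010))
wiggles = [0.00, 0.01, -0.01, 0.02, -0.02, 0.08, 0.03, -0.03, -0.09, 0.01]
anomalies = [0.02 * k + w for k, w in enumerate(wiggles)]

example_scores = temperature_scores(years, anomalies)
for year in years:
    print(year, example_scores[year])
```

In this toy series, the year given the largest positive wiggle gets the top score and the year given the largest negative wiggle gets the bottom score, even though the later year is still warmer in absolute terms than the early years of the series.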

The study only includes average annual global temperatures through 2018. But as reported in the annual State of the Climate, 2019 ranks as the second or third warmest year on record (depending on your favored dataset), adding another year to the recent string of those warmer than any years back to the mid-1800s. The report notes that each successive decade since 1980 has been warmer than the previous one. Arguez’s research suggests that this pattern is likely to continue.

This is a testament to the exceptional warmth experienced over the last few decades, punctuated by the last [5] years [2015–19], which have separated themselves from “the pack.”

We asked Arguez a few questions (more found in the latest print/digital issue of BAMS) about this work as well as about his background and what sparked his interest in meteorology.

BAMS: What would you like readers to learn from your study of record global temperatures?

Anthony Arguez: I would like the general public to know that there is not a great deal of suspense that most years—if not all—over the next decade will likely register as top 10 years. In fact, the data suggest we should expect this, as it would likely take a pretty abrupt change to get us off this trajectory.

BAMS: How did you become interested in finding new ways to analyze the global temperatures and their trend?

AA: I feel like I’ve been staring at the annual global temperature time series continually over the past 15 years or so because it is just so interesting in many ways. I find it challenging and rewarding to develop methods to translate volumes of data into answers to specific questions posed by the general public. I’ve drawn inspiration from Nate Silver, whose penchant for expounding on and communicating the “signal” hidden in the “noise” informs the way I would like to see myself and fellow climate scientists communicate to the general public more effectively.

BAMS: What surprised you most in doing this work?

AA: I was very surprised that the ranking errors we found were so small! Before calculating the results, I had a gut feeling that these errors would be modest, but the mean absolute ranking error of ~2 spots a full 10 years out was well below anything I could have imagined. I clearly under-appreciated the predictability inherent in the observed upward trend when it comes to annual global temperature rankings.

BAMS: What was the biggest challenge?

AA: I think the biggest challenge we faced was that we were not aware of any similar operational products in existence (neither for projected rankings or global annual temperature scores), or of any papers that had characterized ranking errors in a similar fashion, so we were in uncharted territory to some extent.

BAMS: This isn’t the biggest climate challenge, or surprise, you’ve ever faced…

AA: I became interested in meteorology as a teenager in 1992 when Hurricane Andrew totaled my parents’ home in Miami, Florida.

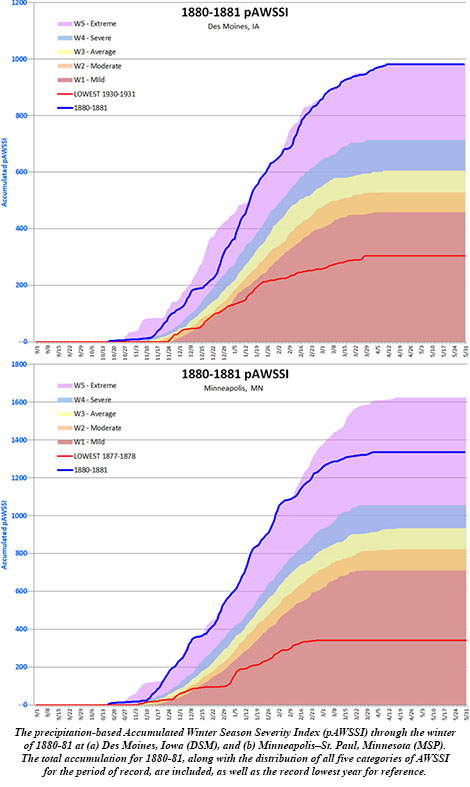

Boustead and co-authors Martha D. Shulski and Steven D. Hilberg set out to determine which parts of Laura’s stories are based in fact, and in the process, filled in the gap left by the absence of analysis or documentation in scientific literature about the Hard Winter of 1880-81. In the process, Boustead et al. show that the Hard Winter places recent severe winters, such as 2013-14, into context.

BAMS asked a few questions of Boustead to gain insight into her research. A sampling of answers is below:

Barbara Mayes Boustead: The Long Winter research began over a decade ago as I reread the book as a “comfort read” on the tail end of a winter, reminding myself that even the longest winters do eventually end. I’ve been reading Laura Ingalls Wilder’s books since I was in elementary school, and I had always wondered if the winter was really as Wilder had described it. And then I got to thinking – I am a meteorologist, and I have the tools to look it up! The deeper I dug, the more that my questions led to more questions. I especially got excited as I found data that verified much of the weather that Wilder had described. And I knew I had found a resonant topic when I presented the work at a conference called LauraPalooza in 2010 (it’s real and it’s serious!) and was overwhelmed with questions and discussion following my presentation.

In that assessment of the current literature, Tom Knutson (NOAA) and other top tropical experts reviewed a number of peer-reviewed studies and determined that a majority found the numbers of named storms actually decrease in climate projections as we move deeper into this century. But there was no consensus among the authors to either support or refute those studies since their research also showed that “there is no clear observational evidence for a detectable human influence on historical global TC frequency.”

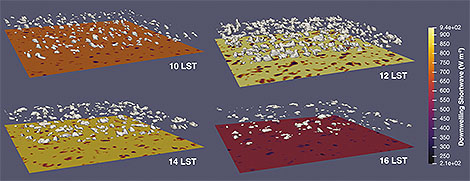

At a “Community Day,” the scientists shared their aircraft and interests with the public as well. Working together all in one place has huge benefits. The teams get to see how they compare with each other, work out the kinks with their UAS, and move faster toward their research goals. It’s one reason they are getting so good so fast.
